We have not discovered life on K2-18b.

We have not even discovered water on K2-18b.

All we can say is that the planet does not… not… have an ocean.

In-person conferences actually suit bugger all nobody, and academia needs to become as invested in realising the potential of virtual experiences for scientific progress as we are in developing the next generation of instruments. (A look at going virtual beyond the in-person experience.)

We need to talk about K2-18b.

You know why.

We shall begin with a plot re-cap.

K2-18b is an extrasolar planet, orbiting a dim star known as a red dwarf. As the planet slid across its star, light from that central ball of stellar fusion passed through the planet’s atmosphere. This was detected with the Hubble Space Telescope, which noted that wavelengths of light typically munched up by water molecules were missing. Thus occurred the first detection of water vapour in the atmosphere of an exoplanet that is smaller than Neptune… and that orbits in the habitable zone.

Is this exciting? HELLA YES.

Should we all be focussed on the last five words of that re-cap? HELLA NO.

Why?

Because the habitable zone means absolutely nothing for this planet.

And you would die there.

Now at this juncture, I feel you burning to stop this planetary crusade. “Wait a minute!” —you shout— “We’ve heard this spiel from you before. The habitable zone is just a region around a star where the Earth could support liquid water. Any old Bob, Dick or Henrietta of a planet can saunter in and orbit without any other Earth-like properties whatsoever.”

Average temperatures of your favourite habitable zone worlds. The Moon doesn’t really do average temperature, as it lacks an atmosphere to do the averaging. So lunatics get a scorching day and frozen night. They die. Just like you would.

That is true. Well done for remembering.

“Buuut this time, we’ve detected water vapour!” —you persist— “So we know that the planet HAS ITSELF SOME SEAS! And everyone knows, that makes for some sweeeeeet alien lattes!”

No. NO. NOO! I try to inject, but you blaze on unperturbed:

“Sure, habitability is complex and we need more than water to thrive. But the detection of water on a habitable zone planet makes K2-18b the absolutely scrumptious best candidate for a world teeming with small furry creatures we’ve ever ever evvvvvvveeeeerrrr seen!”

At this stage, I post a cute meme to the internet and wait for you to collapse in an exhausted heap of alien world ecstasy.

— Elizabeth Tasker (@girlandkat) September 12, 2019

( • . •)

/ >🌚yes, I will tell you all I know about planet diversity.

( • - •)

🌚< \ stop... stop calling them all Earth 2.0.

Now that we’re settled, let me tell you how you are guaranteed to die on K2-18b (if you want a cup of tea, go and get it now).

K2-18b weighs in at about 8 times the mass of the Earth, with a radius 2.3 times that of our planet. This gives the planet an average density of around 3.3 g/cm³.
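If you fancy checking that density yourself: density is mass over volume, and volume goes as radius cubed, so in Earth units ρ = ρ⊕ × M / R³. Here is a minimal Python sketch, assuming Earth's mean density of 5.51 g/cm³. With the rounded numbers above (8 Earth masses, 2.3 Earth radii) it gives about 3.6 g/cm³; nudge the radius to 2.4 Earth radii and it drops to about 3.2 g/cm³. The quoted ~3.3 g/cm³ sits inside that spread.

```python
EARTH_DENSITY = 5.51  # Earth's mean density in g/cm^3

def bulk_density(mass_earths, radius_earths):
    """Mean density in g/cm^3 from mass and radius given in Earth units.
    Density is mass / volume, and volume scales as radius cubed."""
    return EARTH_DENSITY * mass_earths / radius_earths ** 3

for radius in (2.3, 2.4):
    print(f"M = 8, R = {radius}: {bulk_density(8.0, radius):.1f} g/cm^3")
```

The cube is the thing to notice: a 4% change in radius shifts the density by about 12%, which is why density estimates for small exoplanets come with chunky error bars.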

This density is tricky. It’s similar to Mars (3.9 g/cm³), which initially seems promising, Mars being a rocky world that we believe may have been habitable in the past. But Mars is a squiffy excuse for a planet. It has only 1/10 of the mass of the Earth. As planets beef up, gravity exerts a greater squeeze that ups the density. The Earth has an average density of about 5.5 g/cm³, and if K2-18b had a similar composition, we’d be looking at a density of ballpark 10 g/cm³ [1] (<-- these are references at the bottom of the article; see how professional we’re getting?).
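Where does “ballpark 10 g/cm³” come from? Footnote [1] points to Weiss & Marcy (2014), who fitted a straight line to the densities of small rocky planets: ρ ≈ 2.43 + 3.39 R g/cm³, with R in Earth radii. That fit was made for planets smaller than 1.5 Earth radii, so plugging in K2-18b's 2.3 is an extrapolation; treat this Python sketch as a back-of-envelope estimate, not a model.

```python
def rocky_density_weiss_marcy(radius_earths):
    """Empirical density-radius fit for small rocky planets (Weiss & Marcy 2014):
    rho = 2.43 + 3.39 * R, in g/cm^3 with R in Earth radii.
    Fitted below 1.5 Earth radii; larger radii are an extrapolation."""
    return 2.43 + 3.39 * radius_earths

print(f"Earth-sized:  {rocky_density_weiss_marcy(1.0):.1f} g/cm^3")
print(f"K2-18b-sized: {rocky_density_weiss_marcy(2.3):.1f} g/cm^3")
```

At Earth's size the fit gives about 5.8 g/cm³, close to the real 5.5, which is the sort of accuracy to expect from a straight line. At 2.3 Earth radii it gives about 10 g/cm³, three times K2-18b's measured value.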

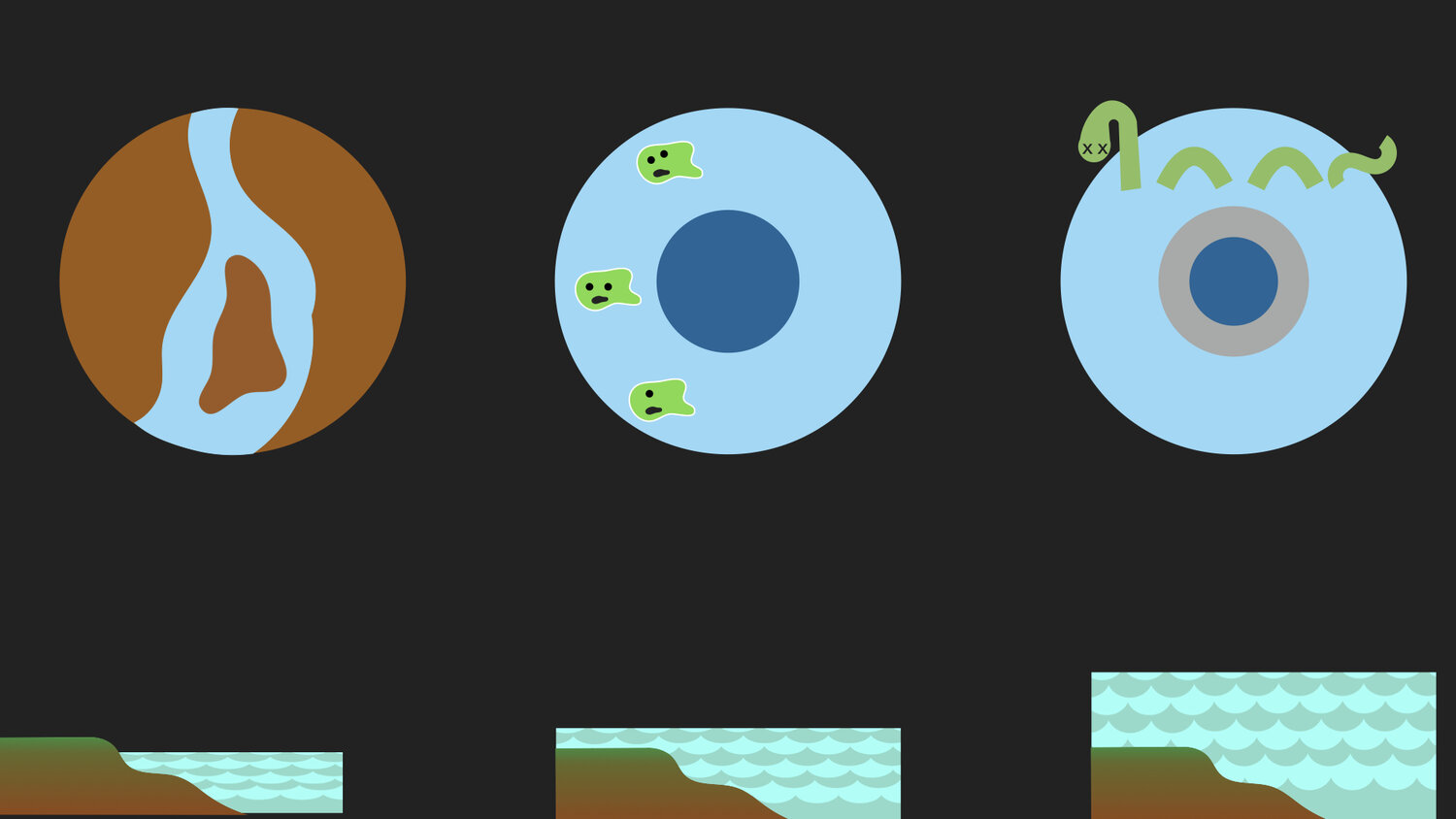

To lower that density, we’ve got to mix our Earth-y silicate existence with something light and fluffy. For this planetary cookery class, there are three main options:

Fatal Option #1: A thick atmosphere of light gases such as hydrogen and helium.

Fatal Option #2: A truck load of water.

Fatal Option #3: A funky hybrid mix of the fatal hydrogen and fatal water.

Excited? Who wouldn’t be?! Let’s start with Bachelor Planet #1.

While the Earth doesn’t have a strong enough gravitational pull to hold onto light gases such as hydrogen and helium, there’s no real question that K2-18b has a hefty stockpile in its atmosphere. The detection of water vapour was published in two independent studies [2] and both find the data is best matched by a hydrogen-dominated atmosphere. Moreover, previous empirical eyeballing of exoplanets [3] has found that once you start approaching a radius of 1.5 Earth radii, planets swell in size but not mass, which is conducive to acquiring a thick cloak of light gases. If we claim that the low density of K2-18b is entirely due to these light and fancy-free elements, then a mass fraction of about 0.7% in hydrogen and helium should give us the required density [4].

So less than one percent? I bet you’re thinking that’s no big deal! What feeble life couldn’t handle that?!

You. You couldn’t handle that.

It turns out a splash of hydrogen goes rather a long way. Writing in the Astrophysical Journal, Eric Lopez and Jonathan Fortney offer a particularly delicious analogy [4]:

A 0.5% hydrogen/helium atmosphere leads to a surface pressure twenty times higher than that at the bottom of the Marianas Trench (deep. You can’t live there), and the temperature would be more than 2700°C (hot. You can’t live there).
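Why does a splash of hydrogen weigh so much? The pressure at the bottom of an atmosphere is roughly the envelope's weight divided by the area it rests on, P ≈ M_env × g / (4πR²). Here is a crude Python sketch under loud assumptions: an 8 Earth-mass planet, a 0.5% envelope, gravity and area both evaluated at the full 2.3 Earth radii, and the Mariana Trench floor taken as about 1.1 × 10⁸ Pa. The real rocky surface would sit deeper, where gravity is stronger and the area smaller, so this underestimates the pressure.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24   # kg
R_EARTH = 6.371e6    # m
MARIANA_PA = 1.1e8   # rough pressure at the Mariana Trench floor, Pa (assumption)

def base_pressure(mass_earths, radius_earths, envelope_fraction):
    """Crude hydrostatic estimate: envelope weight spread over the surface area.
    Gravity and area are both evaluated at radius_earths, and the envelope's
    own thickness is ignored."""
    mass = mass_earths * M_EARTH
    radius = radius_earths * R_EARTH
    gravity = G * mass / radius**2
    area = 4.0 * math.pi * radius**2
    return envelope_fraction * mass * gravity / area

p = base_pressure(8.0, 2.3, 0.005)
print(f"{p:.1e} Pa, about {p / MARIANA_PA:.0f} times the Mariana Trench floor")
```

Even this lowball version comes out around 1.3 × 10⁹ Pa, roughly a dozen times the trench floor: the same order of magnitude as the Lopez & Fortney comparison, whose models are far more careful than this back-of-envelope.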

“WAIT A MINUTE!” —you shout— “The planet is in the habitable zone! How did we get to surface temperatures of thousands of degrees?!”

The problem is that the habitable zone (as used for exoplanet discoveries) is defined as the amount of starlight the Earth needs to keep temperate surface conditions. Our planet can adjust the surface temperature (on rather long geological timescales) by altering the level of carbon dioxide in the atmosphere through the carbon-silicate cycle. As carbon dioxide is a greenhouse gas that traps heat, lowering its level cools the planet while letting it accumulate gives the planet more of a cosy blanket. Within the habitable zone, this thermostat works well. But beyond its edges, the carbon-silicate cycle can’t manage its job and the planet either boils or freezes.

Within the habitable zone, the Earth can adjust the level of carbon dioxide in the atmosphere to keep surface conditions comfortable.

So the habitable zone is the region where the carbon-silicate cycle can put the right amount of carbon dioxide into the Earth’s atmosphere to keep the surface temperature comfy.

Got a planet with no carbon-silicate cycle?

Or a world with a different atmosphere composition?

Then that habitable zone don’t mean a jot. (And you all really know this as Mars and the Moon are in the habitable zone but don’t offer lakeside retreats.)

The fact that the atmosphere of K2-18b is dominated by hydrogen therefore utterly invalidates our habitable zone ticket. Like carbon dioxide, hydrogen is a greenhouse gas, giving K2-18b an extra thick thermal coat that it can’t shrug off. Therefore, even if the rest of the planet were hypothetically entirely Earth-like with a carbon-silicate cycle, its surface would be far, far warmer than the Earth’s, despite sitting within the habitable zone.

Hydrogen is also a greenhouse gas, making planets too hot in the classical habitable zone.

In short, you’re squashed flat and roasted. Got it? Excellent. Let’s move on to Bachelor Planet #2: the truck load of water.

…. where we are going to use the same logic to die horribly. Again.

If we ignore the hydrogen detection in the two discovery papers (or assume it’s somehow suuuuuuper low), then we can match the low density of K2-18b by employing a thinner, more Earth-like atmosphere but mixing a whole load of water into the silicate rock. The problem is that the amount we need… is rather more than what’s in your kitchen sink.

To match the density of K2-18b, the planet’s mass would need to be as much as 50% water [2]. By contrast, the Earth has less than 0.1% water by mass. And while all life on Earth needs water, too much can geologically murder the entire planet.

The carbon-silicate cycle works best when there’s exposed land. Before we reach 1% water, the planet will likely become an ocean world and the land sinks below the waves. If the sea is shallow enough, a more pathetic carbon-silicate cycle can still work with the sea floor. Ramp that up by pouring more water into the planet, and the weight of the ocean on the seabed will trigger the production of deep sea ices. These ices seal off the silicate rocks from the water, shutting down the carbon-silicate cycle as carbon dioxide can no longer be stashed away in the ground. Not only does that mean the habitable zone shrinks to a thin strip as the planet becomes unable to adapt to different levels of starlight, but it also prevents the cycling of nutrients such as phosphorus from the planet interior to the ocean. Even with perfect positioning, life therefore gets throttled due to lack of nosh.

Up the water content on the planet to several percent of its mass, and the pressure of the water can shut down plate tectonics. The exact role of the motion of our crustal plates in the habitability of the Earth is not fully understood, but it is thought to be a key player in nutrient cycling and magnetic field generation that protects our atmosphere. In short, by the time you have shut down plate tectonics, water has rendered your world well and truly geologically dead.

There is a possibility that an ocean world could develop alternative mechanisms outside the carbon-silicate cycle for temperature modulation [5]. But different mechanisms may require different levels of starlight, resulting in a new habitable zone that has different boundaries from the classical carbon-silicate cycle definition. An ocean world K2-18b in the regular carbon-silicate habitable zone is therefore not a mecca for alien lattes[**].

Could we rescue this situation with hybrid Bachelor Planet #3? Mishmash a splash of hydrogen atmosphere with mega ocean but avoid the pitfalls of both?

No.

Because you can’t minimise both at once: shrink the hydrogen atmosphere and you need more ocean to match the density, and vice versa. And even abundances much smaller than those suggested above would kill the planet.

The bottom line is that K2-18b is not a potentially habitable world and it is not where we would focus our resources in hunting for biosignatures. The planet’s measured density requires a thick hydrogen atmosphere, a deep ocean, or both. These un-Earth-like environments mean that the habitable zone does not apply to K2-18b and moreover, they suggest a world too hot and geologically dead to support life.

So is the discovery of K2-18b notable at all?

ABSOLUTELY. For three main reasons:

We’ve detected water in the habitable zone. While K2-18b is not a potentially habitable world itself, the presence of water suggests one of life’s key ingredients may be easy to come by on more Earth-like rocky worlds.

Being able to detect a planet’s atmosphere is crazy hard. But it’s this information that tells us what a planet is truly like, from surface conditions to geology to potential biology. And we’ve just done it for a planet in the habitable zone. It’s going to be the start of an amazing slew of information, not just about potentially habitable worlds but ones that might be far more alien than anything we’ve dreamed about. And on that note…

Planets like K2-18b that are larger than the Earth but smaller than Neptune are called ‘super Earths’ or ‘mini Neptunes’. They appear to be the most common size of planet in our galactic neighbourhood but we… ain’t got one. Are these large worlds more like giant terrestrial planets or teeny gas giants? Do they form in-situ or migrate from somewhere else? Can they have an active geology or are they all crushed by water or gas? K2-18b orbits a bright but small star and has an atmosphere not hidden by clouds. It will be the perfect planet for observations with up-coming instruments such as the JWST, which will be able to detect far more molecules in the planet’s atmosphere and shed some light on this most mysterious of all planet classes. Frankly, this excites me the most. After all, for Earth 2.0… well, we already got one.

The water vapour on K2-18b is an awesome discovery: it’s a huge step towards discovering what planets around other stars are really like. But it’s not the habitable world you’ve been searching for.

You would die there. Stop thinking about going.

[**] Edwin Kite (University of Chicago) reached out to me to note that a possible cycle exists in which carbon dioxide could be exchanged directly between ocean and air. While very different from the carbon-silicate cycle, the level of starlight required turns out to be similar, potentially allowing a water world to be temperate within the regular habitable zone. Could this save K2-18b? It’s not clear, as this is very new theoretical territory, with no terrestrial example. The planet would still have to lose most of its hydrogen atmosphere in order not to have a profusion of greenhouse gases that would boost its temperature. But it would be a great location to start investigating alternative planetary cycles to our own.

Unfashionable facts:

[1] Estimate based on Weiss & Marcy (2014) for a rocky planet without a thick atmosphere.

[2] Detection papers: Benneke et al. (2019) and Tsiaras et al. (2019).

[3] A bunch of papers have noticed this break around 1.5 Earth radii where rocky planets seem to acquire deep atmospheres, including Weiss & Marcy (2014), Rogers (2015), Chen & Kipping (2016) and Fulton et al. (2018).

[4] Based on Figure 9 in Lopez & Fortney (2013).

[5] For example, ‘the ice cap zone’ by Ramirez & Levi (2018) or a cycle just between ocean and air by Kite & Ford (2018).

People. We need to have another chat.

We have all these exoplanets. Some of them are deliciously Earth-sized. And you want to know which are the most habitable. But here’s the thing…

You can’t.

NOT POSSIBLE. CAN’T BE DONE. DENIED. I don’t even care what you read in the Daily Mail last week.

There’s no way of creating a quantitative scale that actually measures how capable a planet is of supporting life. No matter how these habitability metrics are constructed, they. Just. Don’t. Measure. Habitability.

Why? I’m so glad you asked. Take a seat. This ain’t going to be difficult.

First let’s crush the ultimate temptation: Why isn’t an Earth-sized planet definitely habitable? Because this:

Our Solar System has two Earth-sized planets: the Earth and Venus. Venus is 95% the size of the Earth, yet its surface would melt lead. And you.

Every time you see “Earth-sized planet” and feel tempted to pack a suitcase, remember this is equivalent to saying “Venus-sized” and see if you still feel like quaffing down an out-of-this-world Starbucks coffee.

Buuuuuuut (I hear you scream) there are so many more planet properties we can measure to calculate the perfect exo-real estate!

NO. WRONG.

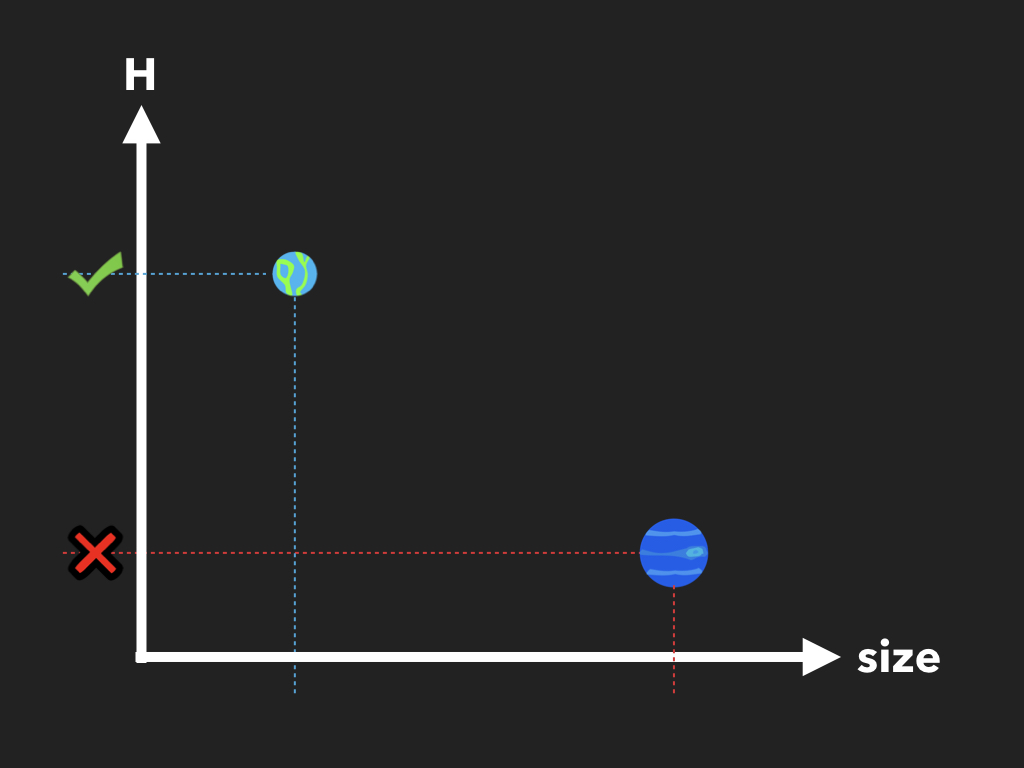

While we’ve discovered nearly 4,000 exoplanets, the amount known about each world is pretty sparse. For most of the planets out there, we know only the radius and the amount of radiation the planet receives from the star. Just two properties.

There are three important points to realise at this juncture:

Two is a small number. If you had only two jelly beans, that would be sad.

No matter how complex you make your habitability metric, it can only depend on two measurements. Any other quantities will need to be estimated from those two.

This is like designing a skin-tight superhero suit with exact measurements for torso length, foot size, knee height, shoulder width, arm and leg length, waist, hip and head size but actually just measuring your waist and height and estimating the rest from those two measurements. Sounds doable? Ask any woman about getting jeans to fit.

The two properties you can measure are not directly related to habitability. Whether a planet can support life depends on the surface environment (or potentially sub-surface for life underground). Venus is proof that the radius and level of starlight really tell you jack. Not only does the planet have nearly the same radius as the Earth, but it receives only about twice the amount of starlight. If Venus had an Earth-like atmosphere, then the surface temperature would be somewhere in the 50°C (~ 120°F) range. Certainly a little toasty, but a far cry from the true surface temperature of 460°C (fuck no°F). The difference is Venus’s thick carbon dioxide atmosphere, which traps heat like…
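Where does “about twice the amount of starlight” come from? Starlight spreads out over an ever-bigger sphere, so the flux a planet receives falls off as one over distance squared. A minimal Python sketch, assuming Venus's mean orbital distance of about 0.723 astronomical units (Earth sits at 1):

```python
VENUS_DISTANCE_AU = 0.723  # Venus's mean distance from the Sun; Earth's is 1 AU

def relative_flux(distance_au):
    """Starlight received relative to Earth's: flux falls off as 1 / distance**2."""
    return 1.0 / distance_au**2

print(f"Venus receives {relative_flux(VENUS_DISTANCE_AU):.2f} times Earth's starlight")
```

That comes out at roughly 1.9. Notice what the calculation cannot see: nothing in the radius or the flux hints at the 460°C waiting under Venus's atmosphere.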

… seriously, nothing on Earth comes close. That’s the point.

OKAY OKAY OKAY. WHAT IF we could measure more? While our current telescopes have been focussed on finding these planets, the next generation may be able to discover properties such as whether they have surface water, or identify gases in their atmospheres. If we could measure a few basic properties about the surface environment, could we create an accurate habitability scale?

Here is your problem: this is an accurate diagram of all the planets we know support life:

The only planet we know that supports life is the Earth. That gives us just one data point. And you can’t make any kind of meaningful scale with just one point.

Don’t believe me? Let’s give that a go.

We know a planet can be habitable if it is the same size as the Earth (even if that isn’t always true). But does a planet become more or less able to host life if you change the size? Without more habitable planets of different sizes to compare with, the scale could go up or down as the size increases.

But but but (you shout quite reasonably) we do know of many uninhabitable planets. Can’t we use this to constrain the radius? You’re absolutely right and we can… a bit.

Based on a handful of planets that have both radius and mass measurements, astronomers spotted that planets more than about 50% larger than the Earth typically had quite low densities. This suggests those planets have very very thick atmospheres, like Neptune.

Neptune is definitely not habitable. Let’s use a “50% larger” as a size limit. The problem is that y’all wanted a habitability scale. So these two points have to be connected by…. what?

The Earth is the largest rocky planet in our Solar System and Neptune is the smallest gaseous world. We don’t have any data points to tell us how the environment would change as the Earth increases in mass. A slight increase in mass might not change habitability at all. Or the extra gravity might flatten our topography, immersing the land under water and cutting off weathering, to result in the planet freezing into a snowball of death while the Sun was still young. Whichever. We’ve absolutely no way of knowing.

The best we can do is say 50% larger = probably bad. Below that…. pray to your gods.

What this means is when you see a fancy-schmancy habitability law that looks all like:

You need to be thinking like the embittered, twisted and cynical soul you know you really are and say coldly:

R, M, D and T are likely based on only two actual measurements, neither of which directly relate to the surface property.

The values a, b, c and d supposedly tell you how the habitability (H) changes as you vary that property (R, M, D and T). But there’s no way we have a damned clue what they should be.

The resultant H is as meaningful as my star sign. Now go get this cancer a beer.

When hunting for planets that might be habitable, the best that can be done is to slam down some limits. A planet more than 50% larger than the Earth will probably have choked its surface under a Neptune-like fug. A planet that receives far less starlight than us risks being too cold for talking. Or living. Not good options for scoping out alien neighbours.

What we can’t do is develop a scalable index for habitability and expect a planet with H = 0.7 to be less likely to support life than a planet with H = 0.75.
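To see why those exponents matter so much, here is a deliberately simple, hypothetical sketch in Python: a toy index that multiplies an “Earth-similarity” term for radius by one for starlight, each raised to an exponent. The functional form, the exponents and both planets are invented for illustration (planet A is Earth-sized but gets 1.8 times our starlight; planet B gets our starlight but is 1.4 times our radius). Two equally unjustified exponent choices put the planets in opposite orders.

```python
# Toy "habitability index" built from the two quantities usually measured:
# radius R and stellar flux S, both in Earth units. The functional form and
# the exponents a, b are ARBITRARY, which is exactly the problem.

def similarity(x, earth_value=1.0):
    """Equals 1 when x matches Earth and drops towards 0 as x moves away."""
    return 1.0 - abs(x - earth_value) / (x + earth_value)

def toy_index(radius, flux, a, b):
    return similarity(radius) ** a * similarity(flux) ** b

# Two hypothetical planets: (radius, flux) relative to Earth. Not real data.
PLANETS = {"A": (1.0, 1.8), "B": (1.4, 1.0)}

def ranking(a, b):
    """Planet names ordered from highest to lowest toy index."""
    scores = {name: toy_index(r, s, a, b) for name, (r, s) in PLANETS.items()}
    return sorted(scores, key=scores.get, reverse=True)

print(ranking(1.0, 1.0))  # radius and flux weighted equally: ['B', 'A']
print(ranking(3.0, 1.0))  # decide radius matters more:       ['A', 'B']
```

Nothing in the data prefers one choice over the other, yet the “more habitable” planet swaps. Limits (too big, too little starlight) survive this kind of arbitrariness; a ranking does not.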

Can’t be done on just two measurements and one example of life.

Pretty obvious when you think on it, right?

“Hey Elizabeth, do you know what the NASA press conference is about tomorrow?”

I hadn’t a clue. Having stepped off a plane from Japan the night before, I was twirling around in a swivel chair in one of the student offices at McMaster University while I tried to bully my brain into action. Until that moment, I wasn’t aware NASA had announced a press conference.

The NASA vintage travel poster for one of the Trappist-1 planets.

The NASA site did not reveal much. Tomorrow’s event was to “present new findings on planets that orbit stars other than our sun.” It was exoplanet news, but the lack of details left us speculating.

“It’s an atmosphere detection for Proxima Centauri-b!”

“Can’t be. The planet doesn’t transit.”

This fact made our nearest exoplanet something of a disappointment. Proxima Centauri-b had been found by detecting the slight wobble in the position of the star due to the planet’s gravity. However, without an orbit that took the planet between star and Earth, there was no opportunity to examine starlight passing through the planet’s atmospheric gases. Such a technique is known as ‘atmospheric spectroscopy’ and can uncover which molecules are in the air to reveal processes that must be occurring on the planet’s surface, the location relevant to habitability. The next generation of telescopes, including NASA’s JWST and ESA’s Ariel, are focussed on using this method to finally probe planet surface conditions. The uselessly orientated orbit of Proxima Centauri-b, however, removes it from the target selection lists.

This took us back to the problem of what NASA were about to announce.

“It can’t just be another planet.”

“It could be a possible biosignature?”

“… do we have anything that could measure that yet?”

This was the crux of the mystery. It is amazing that in the scant 25 years since the first exoplanet discoveries, finding a new world beyond our solar system has become insufficient to warrant a press conference. We now know of nearly 3,500 exoplanets, roughly a third of which are less than twice the size of the Earth. The news had to be bigger than a simple additional statistic.

However, a discovery of alien life seemed to be too premature. It is true that the presence of biological organisms may be detected by their influence on a planet’s atmosphere. It is also true that the Hubble Space Telescope (HST) can do atmospheric spectroscopy, although not nearly at the resolution of the future instruments. As far as I am aware, HST has examined the atmosphere of three super Earth-sized planets and only seen features in 55 Cancri-e, which orbits so close to its star that a year is done in hours. So … a biosignature was not impossible. It just would have meant we had got very very very very very lucky.

Nobody’s that lucky. Especially not in 2017.

We were evidently not alone in our speculation, since the news was leaked later that day. Seven Earth-sized planets had been discovered orbiting the ultracool dwarf star, TRAPPIST-1. It was a miniature solar system and NASA were about to infuriate me by gabbling non-stop about the prospect of life.

Let’s make something clear:

Apart from roughly the same number of planets (by astronomer standards, 7 basically equals 8. Or 9.) the TRAPPIST-1 system is very unlike our own.

That is what makes it cool.

Also, the system takes its name from a Belgian beer.

Trappist beer. Oh yes.

Last year, three planets were discovered around TRAPPIST-1. The star was named for the telescope that was used in the discovery, the robotic Belgian 60 cm ‘TRAnsiting Planets and PlanetesImals Small Telescope’. It sounds like a perfectly reasonable acronym until you learn that Trappist is also a famous Belgian beer, brewed by monks. Astronomers have no shame. It’s all kinds of wonderful.

The news was that further inspection of the system had added another four planets. The fresh observations had used a number of telescopes around the world and finished with an intensive stint on NASA’s infrared Spitzer Space Telescope.

(Interesting fact: Launched in 2003, Spitzer was never designed to be able to see planets. Some swanky engineering tricks from the ground allowed a 1000 times improvement in measuring star brightness that led to the tiny dip from a transiting planet being detectable. Cool stars like TRAPPIST-1 are a 1000 times brighter in the infrared than at optical wavelengths, making Spitzer a kick-ass planet grabbing machine.)

What was still more exciting is that all the planets transit, leaving the door wide-open for some rocky planet atmosphere spectroscopy rock n’ roll.

Were alien climates ammonia cloudy with a chance of methane meatballs? The next five years might reveal the answer to that question.

The planets were all on short orbits, with years lasting between 1.5 and 13 days. This close-packed system meant that neighbouring planets would appear larger than the Moon in the night sky. The in-your-face sibling-ness also allowed for the planet masses to be measured.

While transit observations normally yield only the planet radius, the gravitational tugs from planets in the same system can vary the time between successive transits. These ‘transit timing variations’ can be used to estimate the size of the tug, and thereby measure the mass of the planets. With the exception of the outermost planet —whose single transit measurement is only enough for a radius estimate— the TRAPPIST-1 planets got both radius and mass measurements.

And you know what that means.

(Density. It means density.)

In fact, the mass measurements were not particularly accurate, leading to error bars as large as the measured value except in the case of planet TRAPPIST-1f. However, all measurements hinted at (and Ms Accurate TRAPPIST-1f agreed) that these planets were on the fluffy side.

With sizes less than the empirical threshold value 1.6 Earth radii, the planets were unlikely to be Neptune-like gas worlds. But their low density suggests they do have a much higher fraction of volatiles than the Earth. They could even be downright watery.

This possibility is backed up in a less obvious way by the planet orbits. The inner six worlds are in resonance, meaning that the ratios between their orbital times can be written as fractions of two small integers. So while the innermost world orbits the star 8 times, the next three planets out orbit 5, 3 and 2 times.

Well… almost. And since we declared above that 7 basically equalled 8 or 9, I’d say we were good.
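The “small integer” claim is easy to check. Here is a minimal Python sketch, assuming approximate orbital periods for the six inner planets (rounded to two decimal places from the values reported by Gillon et al. 2017; planet h is left out). For each neighbouring pair it finds the closest fraction with a denominator of at most 5, which is my own arbitrary cut-off for “small”.

```python
from fractions import Fraction

# Approximate orbital periods (days) of TRAPPIST-1 b-g, rounded from the
# 2017 discovery paper. Planet h is left out.
PERIODS = {"b": 1.51, "c": 2.42, "d": 4.05, "e": 6.10, "f": 9.21, "g": 12.35}

def near_resonances(periods, max_denominator=5):
    """For each neighbouring pair: the closest small-denominator fraction to the
    outer/inner period ratio, and how far off the true ratio is, in percent."""
    names = list(periods)  # dicts keep insertion order, inner to outer
    results = []
    for inner, outer in zip(names, names[1:]):
        ratio = periods[outer] / periods[inner]
        nearest = Fraction(ratio).limit_denominator(max_denominator)
        offset_pct = (ratio / float(nearest) - 1.0) * 100.0
        results.append((f"{outer}/{inner}", nearest, offset_pct))
    return results

for pair, frac, offset in near_resonances(PERIODS):
    print(f"{pair}: ~{frac} (off by {offset:+.1f}%)")
```

The pairs land near 8/5, 5/3, 3/2, 3/2 and 4/3, each off by well under one percent. Close, but not exact: that is the “almost”.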

Strings of planets in resonance are completely unsurprising and utterly predictable.

… so long as you formed somewhere entirely different.

Transits of the seven TRAPPIST-1 worlds. The orbits shown in the first few seconds show the resonance between the planets.

Resonant orbits between neighbouring planets occur when young planets migrate through the planet-forming gas disc. This gas migration can occur once the growing planet reaches the size of Mars and its gravity begins to pull on the surrounding gas, which pulls back. The net force usually sees the planet move towards the star. If multiple planets take this sightseeing tour of their system, their mutual gravity will pull on one another. These tugs only balance out when the orbital times form integer ratios, producing a resonance. The predicted result is a series of planets in resonant orbits close to the star, exactly what is seen in the TRAPPIST-1 system.

If the planets formed in cold outer reaches far from the star, then a substantial part of their mass would be in ice. As the planets moved towards the (ultracool but still a nuclear furnace and way hotter than Colin Firth in Pride and Prejudice) star, the ice would melt into water or vapour. This would explain the low densities compared to the Earth’s predominantly silicate composition.

Three of the TRAPPIST-1 planets stopped their mooch inwards within the star’s temperate zone (or ‘habitable zone’ if you must). This is the region around a star where an exact Earth clone could support liquid water on the surface.

Once more for the cheap seats at the back?

EXACT EARTH CLONE.

If you’re not an exact Earth clone, then the temperate zone guarantees as much as one of Nigel Farage’s Brexit bus adverts.

So how Earth-like are these temperate zone wannabes? On the plus side, they likely have plenty of water. On the down side, it’s quite likely too much.

While the majority of the Earth’s surface is covered with oceans, water makes up less than 0.1% of our planet’s mass. If we had formed further out where water freezes into ices (i.e. past the ‘ice line’), then that fraction could be nearer 50%. This would create huge oceans as the planet warmed, enveloping all land under a sea a bajillion fathoms deep (exact measurement. Prove me wrong.)

The bottom of such a monstrous ocean would be at such high pressure that a thick layer of ice would separate the water from the rocky core. This would scupper the carbon-silicate cycle, preventing the quantity of carbon dioxide in the air responding like a thermostat to global changes in climate. This would mean anything other than the absolute perfect amount of stellar heat would render the planet uninhabitable. The temperate zone would shrink to a thin slice and any slight ellipticity in the planet orbit, or variation in the star’s heat, would fry or freeze everything in sight.

It ain’t impossible for life, but it ain’t promising. It also ain’t Earth.

Even if the oceans were shallow enough to avoid this, the icy composition of the planet might burp out a crazy atmosphere. Our atmosphere was outgassed in volcanic eruptions during the Earth’s early years. But if the planet was made not of silicates, but of comet-like ices, then the gases emerging from the volcanoes would likely be mainly ammonia or methane. Not yummy. They’re also strong greenhouse gases, so they could end up roasting planets within the temperate zone.

Since we’ve no analogue of such planets in our own solar system, it’s hard to speculate on their surface conditions. Could such a rocky ice mix produce a magnetic field? The icy Jovian moon, Ganymede, has a weak field, so it could be possible. If it is not, then any atmosphere might be stripped by the stellar wind.

Google doodle celebrating the Trappist-1 system

The fact we’ve not the foggiest idea of what these worlds would really be like is why they’re so exciting. Here we have 7 prime candidates for atmospheric studies and we’re hoping to see not the same thing as beneath our feet, but something entirely new. This would tell us everything from how planets form (really migration? really ices?) to how a completely non-terrestrial geology behaves. It’s going to be so much more awesome than Ewoks.

So are we going to give these planets better names than TRAPPIST-1b, c, d, e, f, g and h? Speaking at the NASA press conference, lead author Michaël Gillon admitted,

“We have plenty of possibilities that are all related to Belgian beers, but we don’t think that they’ll become official!”

DAMN YOU, IAU.

In better news, NASA has designed a new travel poster to mark the occasion. And there’s a Google Doodle. Yay.

My weekend slid downhill when I began an article that started:

"The discovery of alien life could be a step closer after scientists found a newly discovered planet is ‘likely' to harbour life forms."

My friends, that be pretty big talk for a planet for which we only know the minimum mass.

The planet in question is Proxima-b, whose discovery around our closest star was announced in August. You may remember its name from my previous editions of OMG-PLANET-NEWS-GET-UR-SHIT-TOGETHER.

This article (in the UK newspaper, the Independent) covered recent research published in the scientific journal, MNRAS. Unfortunately, it represents the work more poorly than the Hollywood adaptation of your favourite novel. One you really, really liked.

Now, I too find reading research papers a drag: they’re dry, gloss over the exciting scrumptious bits in favour of a parameter space study and sometimes the graphs aren’t even in colour. But this particular paper was less than five pages. FIVE. And that includes the plots. And those five pages do not discuss the chances of Proxima-b being inhabited by anything.

Let’s take a look at “The innards of Proxima-b: how the movie differs from the book".

The research paper asks a simple question: If we assume Proxima-b is a rocky planet, what might it be like?

Did you notice that summary started with an “if”?

Because Proxima-b has not been observed passing in front of its star, we don’t know the planet’s radius. Instead, astronomers have measured the slight wobble in the star’s position due to the tug from the planet’s gravity. This tells us how much the planet is pulling the star towards the Earth and that gives us a handle on its mass. However, since we don’t know the angle of the planet’s orbit, we can’t tell whether the planet is pulling the star directly towards the Earth, or if only part of its tug is in our direction. The upshot is we know only the lowest possible mass for the planet, with its true value being potentially much higher.
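To see why only the minimum mass falls out, here is a minimal Python sketch of the standard radial-velocity semi-amplitude formula. The planet's mass and sin(i) only ever appear as a product, so a heavier planet on a tilted orbit can produce the same wobble as a lighter one seen edge-on. The Proxima-b inputs (1.27 Earth masses, an 11.2 day orbit, a 0.12 solar-mass star) are rounded illustrative numbers, not fitted results, and the function name is made up for this example.

```python
import math

# Physical constants (SI units)
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30     # solar mass, kg
M_EARTH = 5.972e24   # Earth mass, kg
DAY = 86400.0        # seconds per day


def rv_semi_amplitude(m_planet_earth, inclination_deg, period_days, m_star_sun, ecc=0.0):
    """Amplitude K (m/s) of the star's line-of-sight wobble caused by one planet.

    The numerator only contains m_planet * sin(i), which is why a radial-velocity
    measurement on its own gives a *minimum* mass.
    """
    m_p = m_planet_earth * M_EARTH
    m_s = m_star_sun * M_SUN
    period = period_days * DAY
    sin_i = math.sin(math.radians(inclination_deg))
    return ((2 * math.pi * G / period) ** (1 / 3)
            * m_p * sin_i / (m_s + m_p) ** (2 / 3)
            / math.sqrt(1 - ecc ** 2))


# Illustrative, rounded values loosely based on Proxima-b (assumed)
edge_on = rv_semi_amplitude(1.27, 90, 11.2, 0.12)
tilted = rv_semi_amplitude(2.54, 30, 11.2, 0.12)   # double the mass, sin(30 deg) = 0.5
print(f"edge-on, 1.27 Earth masses: K = {edge_on:.2f} m/s")
print(f"i = 30 deg, 2.54 Earth masses: K = {tilted:.2f} m/s")
```

The two cases give practically the same K, so the star's wobble alone cannot tell them apart.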

Minute Physics runs through how to find exoplanets (explaining the transit and radial velocity techniques).

Proxima-b’s minimum mass is ~1.3 Earth masses; a value that suggests (but still doesn’t guarantee) a rocky surface. The maximum value would make the planet a gas giant such as Neptune.

For this paper, the researchers are only interested in the outcomes for a rocky composition. This leads them to consider only the following situation:

(1) The MINIMUM MASS of the planet is the TRUE mass. Since every measurement has errors, they actually consider the planet has a mass between 1.1 and 1.46 Earth masses.

(2) The planet has a thin Earth-like atmosphere, not a thick envelope like Neptune.

(3) The rock composition is similar to that found in the solar system, with the planet having an iron core, silicate mantle and ice or water top layer.

There is no observational evidence at all for any of these points. A familiarly solid base for the planet is assumed, and then the research asks what permutations are possible. To say this suggests Proxima-b is like Earth is akin to filling a pen with red ink and then claiming this proves all pens write in red. It’s nonsensical and it’s not the point of the paper.

The paper considers three possible masses for Proxima-b, within the error bars that surround the minimum possible value: (1) 1.1 Earth mass planet, (2) 1.27 Earth mass planet and (3) 1.46 Earth mass planet. The authors then tweak the relative amounts of core, mantle and water to see what worlds result.

To put limits on the possibilities, the research assumes a mix of silicate, iron and water typical of planets, asteroids and comets found in the solar system. Planet models that have more water than most solar system objects, or huge cores are dismissed as implausible.

The authors placed down these boundaries as the solar system is the only place where we have data on what ranges are reasonable. However, Proxima-b’s star is not like our sun. Instead, it’s a dim red dwarf with a different mix of elements. It could therefore be that the rocks available to build planets have a very different blend than those around our own sun. Such differences can lead to drastic changes in planet conditions, such as producing carbon worlds with diamond mantles and seas of tar.

However —again— we have to work with the data we have. Which is very little for the Proxima system.

The result of the paper is not a single favoured model, but a range of possibilities for a rocky Proxima-b. A Proxima-b of 1.1 Earth masses but with a radius of 1.2 - 1.3 times Earth's could contain 60 - 70% water, compared to our own Earth’s minute 0.05%. On the other hand, an Earth-sized planet of that mass could contain no water but a fat iron core. The total composition range (for conditions 1 - 3 above) runs from a planet made of 65% iron / 35% rocky silicates (matching a radius of 0.94 x Earth) to a 50% silicate / 50% water world (radius 1.4 x Earth) with a 200 km deep liquid ocean.
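What separates these options is density. A minimal sketch, scaling from Earth's mean density of about 5.51 g/cm³ so that density = 5.51 × M / R³ with M and R in Earth units. The inputs are the 1.1 Earth-mass cases from the paragraph above; the function name is just for illustration.

```python
EARTH_MEAN_DENSITY = 5.51  # g/cm^3


def bulk_density(mass_earth, radius_earth):
    """Mean density in g/cm^3 for a mass and radius given in Earth units."""
    return EARTH_MEAN_DENSITY * mass_earth / radius_earth ** 3


# Same mass, three of the radii discussed in the paper summary
for radius in (1.0, 1.2, 1.3):
    print(f"1.1 Earth masses, {radius} Earth radii: {bulk_density(1.1, radius):.2f} g/cm^3")
```

Because density goes as 1/R³, a 30% bigger radius more than halves the density: the difference between an iron-heavy rock and a world that is mostly water.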

While the inspiration of the paper was Proxima-b, there’s nothing really particular about this calculation that applies only to this planet. The results are true for any world around 1.3 Earth masses.

Should the radius of Proxima-b ever be measured, these models could help narrow down possible planet conditions or even rule out the planet being rocky at all. However, it’s worth noting that even for an exact radius and mass, different combinations of water, silicate and iron are still possible. At present, there is no way of selecting a more probable model amongst any of the options.

So do these possibilities say anything about habitability? Not a jot.

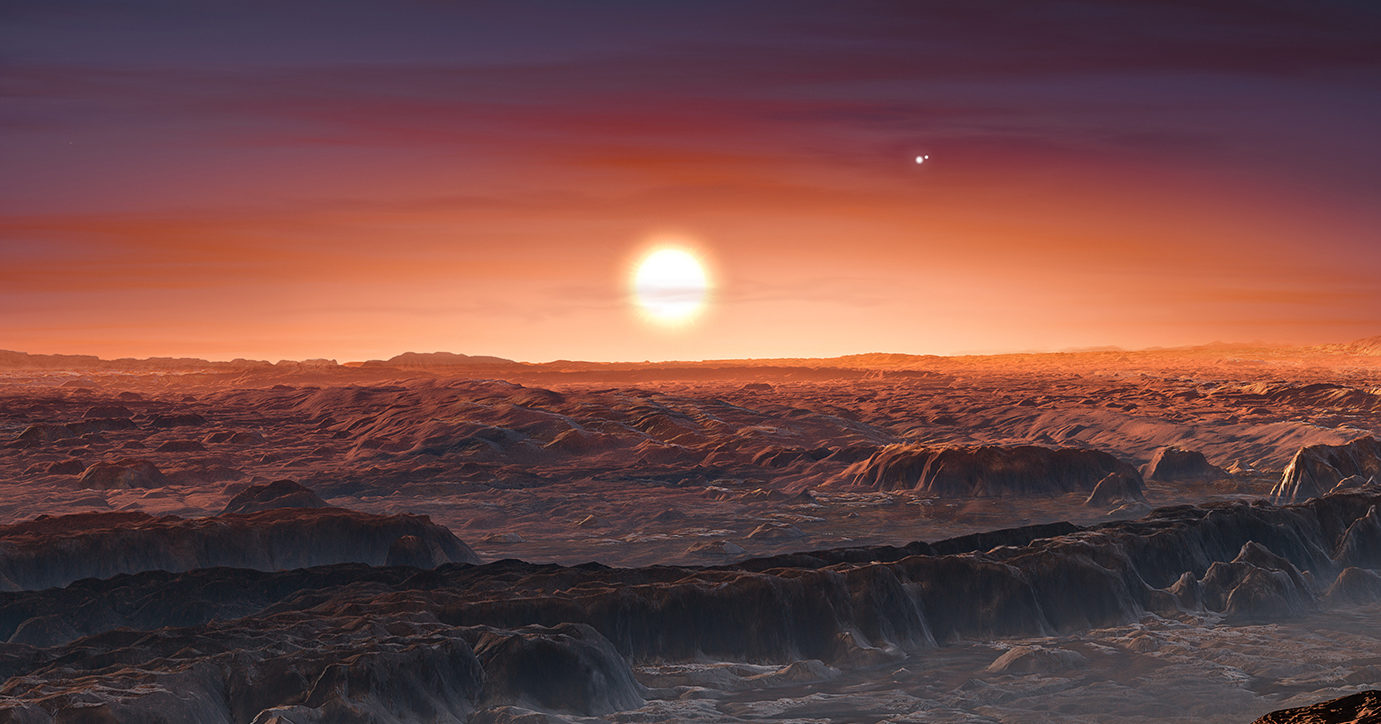

When you only know the minimum mass of a planet, even an artist impression endorsed by ESO isn't based on a whole lot.

Should water be present, life would get a helpful medium for some biochemistry action. But this is only one of many many (many many) factors. The changing iron core size is liable to affect the magnetic field; a likely essential component of any planet orbiting a red dwarf. These dim stars may sound benign, but they are prone to violent outbursts of energy that could strip a planet’s atmosphere without the protection from some heavy duty magnetics. A thick mantle will have a bearing on plate tectonics and is liable to determine the gases in the atmosphere. The deep water world may also have a thick layer of ice that cuts the ocean off from the silicate surface below, preventing the carbon-silicate cycle of elements that helps control planet temperature on Earth.

We cannot be any more quantitative about these properties, since we don’t know the surface conditions on any exoplanet. The next generation of instruments is just beginning to be able to sniff the atmospheres of these new worlds. This may provide us with the first clue of what the surfaces could be like.

This paper was a neat modelling experiment that drives home how varied a planet could be, even with a huge number of assumptions. So how did the news article get the message quite so wrong?

My guess is that the writer did not read the journal paper at all (despite quoting it as the source) but took the information from the very beginning of the press release by CNRS: the ‘Centre National de la Recherche Scientifique’ in France, and home institute of the research authors. The press release overall isn’t bad, but the opening paragraphs are misleadingly phrased and contain the statement: “[Proxima-b] is likely to harbour liquid water at its surface and therefore to harbour life forms."

No dude, that just ain’t true. Water is a possibility for the planet’s composition, but the research doesn’t promise that any is there.

Taking this as the full research, the news article then quotes the lead author seemingly corroborating this statement. While it’s hard to know without hearing the interview verbatim, I suspect this is an example of poor editing. The author apparently told the newspaper:

"Among the thousands of exoplanets we have already discovered, Proxima-b is one of the best candidates to sustain life."

With only the minimum mass measured, there’s no reason Proxima-b is more likely to harbour life than many other exoplanet discoveries. However, it is true the proximity of the planet makes it an excellent candidate for more detailed observations.

"It is in the habitable zone of its star, [and] even if it is really close to the star the fact that Proxima Centauri is a red dwarf allows the planet to have a lower temperature and maybe liquid water."

Note, the author said “maybe” here. Like the Earth, it is unlikely that Proxima-b formed with liquid water: its location close to the star would have been too warm for ice to be incorporated into its body. Instead, the planet would need to form further out and move inwards, or receive a delivery of icy meteorites from further from the star. Both are possible; neither are certain.

"The fact there could still be life on the planet today, not only during its formation, is huge."

I guess this is true, but it’s unsubstantiated by the research paper, so I don’t understand the motivation behind the comment. I’m inclined to blame selective editing once again.

"The interesting thing about Proxima-b is it is the closest exoplanet to Earth. It is really exciting to have the possibility that there is life just at the gates of our solar system."

Yes. This is the main reason Proxima-b is exciting. We don’t yet know if the planet is rocky. We certainly don’t know if its surface conditions are similar to Earth. But while the world is too far to visit with current technology, its relative proximity gives the next generation of telescopes the best chance at finding out more.

--

Research paper: Brugger, Mousis, Deleuil, Lunine, 2016

Artist's impression of the surface of Proxima Centauri b. Credit: ESO

The third brightest star in the night sky is Alpha Centauri. It is our closest stellar neighbour, the fictional birth system of the Transformers, Small Furry Creatures and Pan Galactic Gargle Blasters… and now our closest exoplanet.

Alpha Centauri is actually a triple star system. It consists of a binary pair, Alpha Centauri A and B, and a more distant dwarf star called Proxima Centauri. It is around this dim third wheel that a planet has been detected.

The discovery has exploded my social media feeds. The new world has a minimum mass 30% larger than the Earth and receives a comparable amount of light and heat. Anyone familiar with exoplanet news knows this is sufficient to start packing a suitcase and buying shares in the inevitable Really-Star-Bucks coffee franchise.

Anyone familiar with my feed knows I am about to ice bucket challenge this baby.

To be fair, this is exciting. It's really exciting. In fact, I'm so excited I've ditched my morning chore of cleaning my newly empty apartment to sit on a cardboard box and write this post [*].

Let's start by disembowelling the description of those planet properties. Proxima Centauri b has been found by the Radial Velocity Technique: this is the slight wobble in the star's motion due to the pull from the planet's gravity. The bigger the wobble, the stronger the gravity of the planet and thus, the more massive the planet. All true, but it runs us into our first caveat:

CAVEAT #1: We only know the minimum mass

We can only measure the star's motion directly towards the Earth. This means we only see part of the planet's effect on the star.

This is like trying to judge how far a hot air balloon has moved by looking at its shadow. You'll probably underestimate the distance travelled, because the balloon has moved upwards (causing no change in its shadow position) as well as horizontally. You might think the balloon didn't need much gas to move such a short distance, but actually it needed a lot of fuel to climb vertically. Likewise, Proxima Centauri's planet might be much more massive, but most of its force is pulling the star 'upwards' compared to our line of sight.

Credit: ESO/L. Calçada

How close our measurement of 1.3 Earth masses is to Proxima Centauri b's true mass depends on the orientation of its orbit around the star. If we're looking at the orbit exactly edge on, then 1.3 Earth masses is the true value. If it's nearer to face-on, then the mass could be 70 times the mass of the Earth; the regime of the gas giants. If we assume the orientation is completely random, then the planet is most likely to be about 2.6 Earth masses.
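How likely is a big mass? Here is a minimal sketch under the simplest possible assumption: every orbital orientation is equally likely, which makes cos(i) uniformly distributed between 0 and 1. Under this purely geometric assumption, the median true mass comes out nearer 1.5 Earth masses, and big masses need a nearly face-on orbit, which is rare. Any "most likely" value therefore depends on which statistical assumptions go in. The function names are illustrative.

```python
import math

M_MIN = 1.3  # minimum mass from the radial-velocity data, in Earth masses


def prob_true_mass_exceeds(m, m_min=M_MIN):
    """P(true mass > m) for isotropic orientations (cos i uniform on [0, 1])."""
    if m <= m_min:
        return 1.0
    # true mass = m_min / sin(i), so mass > m  <=>  sin(i) < m_min / m
    #                                           <=>  cos(i) > sqrt(1 - (m_min / m)^2)
    return 1.0 - math.sqrt(1.0 - (m_min / m) ** 2)


def median_true_mass(m_min=M_MIN):
    # The median of cos(i) is 0.5, so the median of sin(i) is sqrt(3) / 2
    return m_min / (math.sqrt(3) / 2)


print(f"median true mass: {median_true_mass():.2f} Earth masses")
for m in (2.0, 2.6, 10.0, 70.0):
    print(f"P(M > {m:>4} Earth masses) = {prob_true_mass_exceeds(m):.4f}")
```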

So… what does this mean?

To gain even a rough inkling about what Proxima Centauri b is really like, we need its density. A high density would indicate a world with a solid surface, while a low density would suggest a Neptune-like gas giant. For density, we need size.

For all those headlines out there that have been proclaiming "Earth-sized planet discovered!" — be ashamed. We don't know jack about Proxima Centauri b's dimensions. This means the planet could be a rocky super Earth, or a gaseous Neptune.

However… if we were to take a guess… a rocky planet is likely. There is empirical evidence that planets smaller than about 1.5 x Earth size are more typically rocky than gassy. This boundary corresponds to a planet mass of roughly 4.5 Earth masses, assuming an Earth-like silicate rock composition. This is bigger than the most probable mass for Proxima Centauri b. So let's be optimistic and say we have a planet with a solid surface, but remember this is an educated guess based on only one measurement.
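Where does 4.5 Earth masses come from? A ball of constant density that is 1.5 times Earth's size would weigh only 1.5³ ≈ 3.4 Earth masses. Real rocky planets squash their interiors as they grow, so mass rises faster than R³. The sketch below assumes a power law M ≈ R^3.7 (equivalently R ≈ M^0.27), roughly the shape reported by interior models of Earth-composition super-Earths. The exponent is an assumption, not a measured value for Proxima Centauri b.

```python
def rocky_mass_from_radius(radius_earth, exponent=3.7):
    """Approximate mass (Earth masses) of an Earth-composition rocky planet.

    Assumes M ~ R**exponent; the exponent exceeds 3 because self-compression
    makes bigger rocky planets denser than small ones.
    """
    return radius_earth ** exponent


print(f"compressible rock, 1.5 Earth radii: ~{rocky_mass_from_radius(1.5):.1f} Earth masses")
print(f"constant density, 1.5 Earth radii:  ~{1.5 ** 3:.1f} Earth masses")
```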

Let's move on to talk about the light and heat issue. Proxima Centauri b is much much closer to its star than the Earth is to the sun. In fact, it orbits at just 5% of our distance. That's way nearer than Mercury, which sits at 40% of the Earth-sun distance. A year on Proxima Centauri b is over in just 11.2 days. However, Proxima Centauri is a weakling among stars. It's a red dwarf with just over 10% of our sun's mass. It therefore only delivers 2/3rds of the radiation to Proxima Centauri b that we get on Earth.
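Both numbers in that paragraph follow from two textbook relations: Kepler's third law for the year length and the inverse-square law for the light received. A minimal sketch, using rounded literature values for Proxima Centauri's mass and luminosity that are assumptions here (the post itself only gives "just over 10%" of the sun's mass and "5% of our distance"):

```python
import math

# Assumed, rounded parameters for illustration
A_AU = 0.0485       # orbital distance of Proxima Centauri b, AU
M_STAR = 0.12       # Proxima Centauri mass, solar masses
L_STAR = 0.00155    # Proxima Centauri luminosity, solar luminosities


def orbital_period_days(a_au, m_star_sun):
    # Kepler's third law in solar-system units: P[yr]^2 = a[AU]^3 / M[Msun]
    # (the planet's own mass is neglected)
    return math.sqrt(a_au ** 3 / m_star_sun) * 365.25


def relative_insolation(l_star_sun, a_au):
    # Flux relative to what Earth receives from the sun: S = L / a^2
    return l_star_sun / a_au ** 2


print(f"year length: {orbital_period_days(A_AU, M_STAR):.1f} days")
print(f"light and heat received: {relative_insolation(L_STAR, A_AU):.2f} x Earth's")
```

A dim star at close range and a bright star far away can deliver the same flux, which is why a planet this close can still sit in the 'Habitable Zone'.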

This means that if you were to coat Proxima Centauri b in Earth's atmosphere, the surface temperature would be chilly… but it could support liquid water. That is, Proxima Centauri b is squarely in the 'Habitable Zone'.
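The atmosphere really is doing the heavy lifting here. A minimal sketch of the blackbody "equilibrium temperature", assuming an Earth-like reflectivity (albedo 0.3) and heat spread evenly over the whole planet. Run on Earth itself, it gives about 255 K, well below Earth's actual average of roughly 288 K; the difference is the greenhouse effect from our atmosphere. Without knowing Proxima Centauri b's air, this bare number is all we have.

```python
def equilibrium_temperature(insolation_earth, albedo=0.3):
    """Equilibrium temperature (K) of a planet that redistributes heat evenly.

    insolation_earth: flux received relative to Earth's (1.0 = Earth).
    albedo: fraction of light reflected (0.3 is Earth's, assumed here).
    """
    sigma = 5.670e-8    # Stefan-Boltzmann constant, W m^-2 K^-4
    s_earth = 1361.0    # solar flux at Earth, W m^-2
    absorbed = (1 - albedo) * insolation_earth * s_earth
    return (absorbed / (4 * sigma)) ** 0.25


print(f"Earth, no greenhouse: {equilibrium_temperature(1.0):.0f} K")
print(f"Proxima b at ~2/3 of Earth's flux: {equilibrium_temperature(0.65):.0f} K")
```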

CAVEAT #2: The Habitable Zone does not say ANYTHING about habitability.

I hate the term 'Habitable Zone' because any rational individual would believe it marks a location suitable for life. You know, with the term HABITABLE being right there in the name.

It doesn't.

All the 'Habitable Zone' means is that a planet with an Earth-like atmosphere and surface pressure could host liquid water. Do we have ANY INDICATION WHATSOEVER THAT PROXIMA CENTAURI b HAS AN EARTH-LIKE ATMOSPHERE?

One guess. Two choices. And the answer isn't 'yes'.

The Habitable Zone tells us nothing at all about the planet, only about its location. If Jupiter sat at the Earth's position, it would be in the Habitable Zone, but certainly not any more habitable. The Habitable Zone is still interesting, since it can be used as part of a selection tool for follow-up studies: with a zoo of over 3,000 known planets, we need to pick out the best candidates for further observations. But this doesn't mean we're selecting Earths.

The Habitable Zone also doesn't tell us that much about the star. Which brings us to the third caveat:

CAVEAT #3: Red dwarfs have behavioural problems.

Red dwarfs make up for their poxy size by spewing strands of stellar material called 'flares'. The sun has flares too, but Proxima Centauri has way bigger ones and the planet is much much closer. The net result is an X-ray bath at Proxima Centauri b that is 400 times that on Earth. This value is the present-day one: the early years of the star would have been far more dangerous. Such radiation levels (past or present) could strip the atmosphere, evaporate any water and nuke all life on the planet.

… or it could not. The Earth is protected from the sun by its magnetic field. If Proxima Centauri b has a molten iron core and some plate tectonic action, then it may have wrapped itself in a magnetic safety vest. Do we know? Not a clue — even if the planet is rocky, its ingredient mix might be entirely different to Earth. Even if it's got the same rocky recipe as Earth, there may still be no magnetic field: Venus is an incredibly close match to us in size and mass, but has next to zilch in the magnetics department.

The flaring action of the star leads to another issue...

CAVEAT #4: The planet may not exist.

Flares, star spots and general star action can produce wobbles as the star rotates that can look an awful lot like a planet. Planets are so tiny compared to stars that it is terribly terribly easy to mistake their faint whisper among stellar groans and creaks. The more rambunctious the star, the harder the detection.

The published signal for Proxima Centauri b looks reasonable, but the star's activity has led to scepticism. Independent observations are needed before we can be certain the planet is definitely there. If it does turn out to be a false positive, it will be in good company: in 2012, an Earth-mass planet was announced around Alpha Centauri B, but later retracted when a fresh analysis of the data caused the signal to vanish.

If the planet does exist, its close proximity to the star may lead to another problem: it might be tidally locked. Like the moon and the Earth, one side of Proxima Centauri b may permanently face the star, while the other side is a land of perpetual night. Whether this creates a split world of deathly roast and deathly cold depends again on the planet's atmosphere. If the air can circle around and redistribute the star's heat, surface conditions might be liveable. Alternatively, it might be the worst cooked Christmas turkey ever.

There is also the issue we've no real idea what it takes to be 'habitable'. With only the Earth as a reference point for hosting life, it's impossible to tell which conditions are the most key. For example, does the planet need to be in a system of worlds to have water delivered to its surface? Is having a moon important for heating? What happens if the planet's orbit is not circular, but a bent ellipse? (And if anyone has seen the ESI number discussed, just bleach your brain.)

It's also worth remembering:

CAVEAT #5: We've seen similar planets.

Proxima Centauri b is not the nearest exoplanet to Earth in mass, nor is it the first found in the Habitable Zone. However...

CAVEAT #6: Yeah, OK, this is still big news...

Proxima Centauri b is the nearest exoplanet that could exist [**] and that is the reason its discovery is incredibly exciting. The Kepler Space Telescope has given us fantastic statistics about the number of planets and the architecture of these alien systems, but often just a single radius measurement for each planet. To understand more about planet formation and the development of life, we desperately need details on these individual worlds. In particular, we need a rocky planet close enough to examine its atmosphere and begin to probe surface conditions. That candidate is very likely to be Proxima Centauri b.

Proxima Centauri b will never be "Earth-like", since its star is definitely not "sun-like". However, red dwarfs are the most common stars in our galactic neighbourhood and the planets around them some of the easiest to find. The science community has raged about whether such stars are the best targets (easy to find planets) or the worst (warm planets dangerously close to the star) to explore the prospect of habitability. Future observations of Proxima Centauri b will hopefully pour facts into a debate that has so far run on speculation and models.

So what is next?

Absolutely ideally, we'd spot the planet crossing the star's surface. This is the 'Transit Technique' for planet detection: the planet blocks out a small amount of the star's light as it passes between the star and Earth on its orbit. The amount of light obscured and the duration of the dip give a handle on the planet's diameter and confirm the orientation of its orbit. With that, we'd have an average density AND the possibility of glimpsing the contents of the planet's atmosphere as the starlight gleams around its edges.
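The size of the dip is simply the fraction of the star's disc the planet covers, (R_planet / R_star)². A minimal sketch that ignores limb darkening and grazing transits, with Proxima Centauri's radius taken as an assumed round 0.154 solar radii:

```python
import math

R_SUN_KM = 695_700.0   # nominal solar radius
R_EARTH_KM = 6_371.0   # mean Earth radius


def transit_depth(r_planet_earth, r_star_sun):
    """Fraction of starlight blocked while the planet is fully on the disc: (Rp / R*)^2."""
    return ((r_planet_earth * R_EARTH_KM) / (r_star_sun * R_SUN_KM)) ** 2


def planet_radius_from_depth(depth, r_star_sun):
    """Invert the relation: planet radius in Earth radii from a measured dip."""
    return math.sqrt(depth) * r_star_sun * R_SUN_KM / R_EARTH_KM


for name, r_star in [("the Sun", 1.0), ("Proxima Centauri", 0.154)]:
    print(f"Earth-sized planet crossing {name}: {100 * transit_depth(1.0, r_star):.3f}% dip")
```

The small star is a bonus: the same planet blocks roughly forty times more of a red dwarf's light than of the sun's, and the measured radius plus the mass gives the density.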

Unfortunately, the probability of Proxima Centauri b actually transiting the star is low. Astronomers have been hopefully gazing at our nearest stars for so long that we should have spotted the little blighter if it were acting as a periodic dimmer switch. This means that the planet either does not transit as viewed from Earth, or the transit is undetectable due to the frequent massive flares from the star.
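Why low? For a randomly oriented circular orbit, the chance that it lines up well enough to transit is about R_star / a, the star's radius divided by the orbital distance. A minimal sketch with assumed round values for Proxima Centauri's radius (0.154 solar radii) and the planet's distance (0.0485 AU):

```python
R_SUN_KM = 695_700.0   # nominal solar radius
AU_KM = 1.496e8        # astronomical unit


def transit_probability(r_star_sun, a_au):
    """Geometric chance that a randomly oriented circular orbit transits: ~R* / a."""
    return (r_star_sun * R_SUN_KM) / (a_au * AU_KM)


print(f"Earth around the sun: {100 * transit_probability(1.0, 1.0):.2f}%")
print(f"Proxima Centauri b:   {100 * transit_probability(0.154, 0.0485):.1f}%")
```

Even a planet hugging a small star only gets odds of a percent or two, so a non-transiting Proxima Centauri b is no surprise.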

However, another exciting prospect is direct imaging. Planets (fortunately) don't have the roaring inferno of stars, but they do emit some heat. If this can be detected, we'd actually be able to see the planet. Direct imaging is still in its infancy and normally only spots Jupiter-sized worlds far from the star. But the proximity of Proxima Centauri means we might just be able to catch a glimpse of it with our best telescopes now… or very soon with instruments such as the James Webb Space Telescope (Hubble's successor) and the ground-based European Extremely Large Telescope in the pipeline. Seeing the planet directly would also allow us to check out its atmosphere and potential surface environment. In my (OK, potentially slightly biased) view, this makes Proxima Centauri b THE most exciting target for these telescopes.

If Proxima Centauri b is so very close, could we visit?

CAVEAT #7: The closest possible exoplanet is still damn far.

Proxima Centauri is 4.24 light years away from Earth. The furthest humans have ever travelled is a loop around the moon: a teeny tiny 0.00000004 light years away. Voyager 1, our furthest and currently fastest travelling spacecraft, would still take about 75,000 years to reach this system (and it's not pointed in the right direction).

That said… one of the craziest ideas in the world recently got funding. Project 'Starshot', financed by Russian billionaire Yuri Milner, is planning to develop a method to send a tiny probe to Alpha Centauri in 20 years. To describe this as a "long shot" is a joke on several levels. However, if it were to be workable, there is now the greatest of great destinations.
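The numbers above are straightforward distance over speed. A minimal sketch, assuming Voyager 1 cruises at roughly 17 km/s and a Starshot-style probe at about 20% of the speed of light (the project's stated ambition), with no time spent speeding up or slowing down:

```python
LIGHT_YEAR_KM = 9.461e12
SECONDS_PER_YEAR = 3.156e7
SPEED_OF_LIGHT_KM_S = 299_792.458


def travel_time_years(distance_ly, speed_km_s):
    """Years to cover a distance at a constant speed (no acceleration, no gravity)."""
    return distance_ly * LIGHT_YEAR_KM / speed_km_s / SECONDS_PER_YEAR


moon_ly = 384_400 / LIGHT_YEAR_KM                               # mean Earth-moon distance
voyager = travel_time_years(4.24, 17.0)                         # ~17 km/s (assumed)
starshot = travel_time_years(4.24, 0.2 * SPEED_OF_LIGHT_KM_S)   # ~20% light speed (assumed)

print(f"the moon: {moon_ly:.1e} light years away")
print(f"Voyager 1 to Proxima: ~{voyager:,.0f} years")
print(f"Starshot-style probe: ~{starshot:.0f} years")
```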

--

[*] I'm moving. Not to Proxima Centauri.

[**] Still orbiting a star

Good reads on Proxima Centauri b by planetary scientists:

Jonti Horner and Tanya Hill @ The Conversation

Sean Raymond @ Nautilus

Spotlight on Research is the research blog I author for Hokkaido University, highlighting different topics being studied at the University each month. These posts are published on the Hokkaido University website.

---

Your pen hovers above the list of names printed on the ballot slip. Do you choose your favourite candidate, or opt for your second choice because they stand a stronger chance of victory?

It is this thought process that drives the curiosity of Assistant Professor Kengo Kurosaka in the Graduate School of Economics.

“When I first started school, we often had to vote for choices in our homeroom,” he explains. “I felt at the time this was not always done fairly! Perhaps that inspired me.”

Assistant Professor Kengo Kurosaka

According to the theorem developed by American professors, Allan Gibbard and Mark Satterthwaite, it is impossible to design a reasonable voting system in which everyone will simply declare their first choice [1]. Instead, people base their selection not only on their own preferences, but on how they believe other voters will act.

Such ‘strategic voting’ can take a number of forms. Voters may opt to help secure a lower choice candidate if they believe their top choice has little chance of success. Alternatively, they may abstain from voting altogether, if they perceive their first choice has ample support and their contribution is not needed. Voters can also be influenced by the existence of future polls, when the topic they are voting on is part of a sequence of ballots for a single event.

One example of sequential balloting was the construction of the Shinkansen line on Japan’s southern island of Kyushu. The extension of the bullet train from Tokyo was performed in three sections: (1) Tokyo to Hakata, (2) Hakata to Shin Yatsushiro and (3) Shin Yatsushiro to Kagoshima. However, rather than voting for the segments sequentially as (1) -> (2) -> (3), the northernmost segment (1) was first proposed, followed by segment (3) and then finally segment (2). Kengo can explain the choice for this seemingly illogical ordering by considering the effect of strategic voting.

The Shinkansen line through Kyushu

In his hypothesis, Kengo made three reasonable assumptions: Firstly, that the purpose of the Shinkansen line is the connection to Tokyo. Without this, residents would not gain any benefit from the line’s construction. The second assumption was that if the Shinkansen line was not built, the money would be spent on other worthwhile projects. Finally, that the order of the voting for each segment of line was known in advance and voted for individually by the Kyushu population.

If the voting occurred on segments running north to south, (1) -> (2) -> (3), Kengo argues that none of the Shinkansen line would have been constructed. The issue is that the people who have a connection to Tokyo have no reason to vote for the line extending further south. This means that once the line has been constructed as far as Shin Yatsushiro in segment (2), there would not be enough votes to secure the construction of the final extension to Kagoshima. The residents living in the Kagoshima area will anticipate this problem. They therefore will vote against the construction of line segments (1) and (2), knowing that these will never connect them to Tokyo. Without their support, segment (2) will also not get built. This in turn will be anticipated by the Shin Yatsushiro residents, who will then also not vote for segment (1), knowing that it cannot result in the capital connection. The result is that none of the three line segments secure enough votes to be constructed.

The only way around this, Kengo explains, is to vote on the middle section (2) last. The people living around Shin Yatsushiro know that unless they vote for segment (3), the Kagoshima population will not support their line in segment (2). They therefore vote for section (3), and then both they and the Kagoshima population vote for the final middle piece, (2). Predicting the success of this strategy, everyone votes for segment (1). The people who do vote against the line are therefore the ones who genuinely do not care about the connection to Tokyo.
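This look-ahead reasoning is backward induction, and a toy version fits in a few lines. The sketch below is not Kengo's model: the bloc sizes are hypothetical, each group votes as a single bloc, and a bloc votes "yes" only when that vote decides whether it ends up connected to Tokyo (otherwise it would rather the money went to other projects). Each vote is solved by first working out what the later votes would do in either case.

```python
# Hypothetical bloc sizes, chosen so no single group is a majority
# but Shin Yatsushiro and Kagoshima together are.
GROUPS = {"Hakata": 3, "Shin Yatsushiro": 3, "Kagoshima": 3, "indifferent": 2}
NEEDS = {                       # segments a group needs to reach Tokyo
    "Hakata": {1},
    "Shin Yatsushiro": {1, 2},
    "Kagoshima": {1, 2, 3},
    "indifferent": None,        # gains nothing from any segment
}
TOTAL = sum(GROUPS.values())


def connected(group, built):
    needs = NEEDS[group]
    return needs is not None and needs <= built


def final_outcome(order, built=frozenset()):
    """Segments that end up built when every bloc anticipates the later votes."""
    if not order:
        return built
    segment, rest = order[0], order[1:]
    if_pass = final_outcome(rest, built | {segment})   # what follows a "yes" result
    if_fail = final_outcome(rest, built)               # what follows a "no" result
    yes = sum(size for group, size in GROUPS.items()
              if connected(group, if_pass) and not connected(group, if_fail))
    return if_pass if yes > TOTAL / 2 else if_fail


print("north to south (1, 2, 3):", sorted(final_outcome((1, 2, 3))))
print("Kyushu order   (1, 3, 2):", sorted(final_outcome((1, 3, 2))))
```

With these assumptions the north-to-south order builds nothing, while voting on the middle segment last builds the whole line, matching the argument above.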

Kengo’s theory works well for explaining why the voting order for the Shinkansen line was the best way to create a fair ballot. However, it is hard to scientifically test universal predictions for such strategic voting, since it would be unethical to ask voters to reveal how they voted after a ballot. To circumvent this problem, Kengo has been designing laboratory experiments that mimic the voting process. His aim is to understand not just how sequential balloting affects results, but the overall impact of strategic voting.

In 8 sessions, each attended by 20 students, Kengo presents the same problem 40 times in succession. The students are divided into four groups of five, denoted by the colours red, blue, yellow and green.

Voting experiment: students are assigned to a group and gain different point scores depending on which ‘candidate’ wins.

They are offered the chance to vote for one of four candidates, A, B, C or D. Students in the red group will receive 30 points if candidate A wins, 20 points if candidate B wins, 10 for candidate C and nothing if candidate D is selected. The other groups each have different combinations of these points, with candidate B being the 30 point favourite for the blue group and candidate C and D being the highest scorers respectively for the yellow and green groups. If each student simply voted for the candidate which would give them the highest point number, the poll would be a draw, with each candidate receiving five votes. But this is not what happens.

When confronted with the four options, the students opt for different schemes to attempt to maximise their point score. One choice is simply to vote for the highest point candidate. However, a red group student may instead vote for the 20 point candidate B, in the hope that this would break the tie and promote this candidate to win. While candidate B is not as good as the 30 point candidate A, it is preferable to either of the lower scoring candidates C or D winning.
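A quick calculation shows why switching can pay. In the sketch below, the red payoffs come from the experiment description, everyone outside the red group votes for their favourite, and ties are assumed to be broken uniformly at random (an assumption, since the tie-break rule isn't described). With sincere voting the four-way tie gives red an expected 15 points; a single red student switching to B hands B the win and red a guaranteed 20.

```python
from collections import Counter
from fractions import Fraction

RED_PAYOFF = {"A": 30, "B": 20, "C": 10, "D": 0}   # points for the red group
FAVOURITE = {"red": "A", "blue": "B", "yellow": "C", "green": "D"}
GROUP_SIZE = 5


def ballots(n_red_switching):
    """Sincere ballots, except n red students who vote for their second choice, B."""
    votes = ["A"] * (GROUP_SIZE - n_red_switching) + ["B"] * n_red_switching
    for group, favourite in FAVOURITE.items():
        if group != "red":
            votes += [favourite] * GROUP_SIZE
    return votes


def expected_payoff(votes, payoff):
    """Expected points if tied leaders are picked uniformly at random (assumed)."""
    counts = Counter(votes)
    top = max(counts.values())
    tied = [candidate for candidate, n in counts.items() if n == top]
    return Fraction(sum(payoff[c] for c in tied), len(tied))


for n in (0, 1):
    print(f"{n} red student(s) switching to B: expected {expected_payoff(ballots(n), RED_PAYOFF)} points")
```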

Since the voting is conducted multiple times, students will also be influenced by their past decisions. If a vote for candidate A was successful, then the student is more likely to repeat this choice for the next round. Then there are the students who attempt to allow for all the above scenarios, and make their choice based on a more complex set of assumptions.

This type of poll mimics that used in political voting and, interestingly, the outcome in that case is predicted by ‘Duverger’s Law’; a principle put forward by the French sociologist, Maurice Duverger. Duverger argued that electing a single winner by plurality vote (whoever receives the most votes wins) strongly favours a two-party system. So no matter how many candidates are in the poll initially, most of the votes will go to only two parties. To support a multi-party political system, a structure such as proportional representation needs to be introduced, where votes for non-winning candidates can still result in political influence.

Duverger’s Law appears to be supported by political situations such as those in the United States, but can it be explained by the strategic behaviour of the voters? By constructing the poll in the laboratory, Kengo can produce a simplified system where each voter’s first choice is clear, so any departure from it can only come from strategy. What he found is that the result followed Duverger’s Law, with the four candidates reduced to two clear choices. Kengo is clear that this does not prove Duverger’s Law is definitely correct: the laboratory situation, with the voters drawn from a very specific demographic, does not necessarily translate accurately to the real world. However, had the principle failed in the laboratory, it would have shown that strategic voting alone cannot explain it.

An overall goal for Kengo’s work is to predict the effect of small rule changes in the voting process, such as the order of voting for segments of a Shinkansen line or the ability to vote for multiple candidates in an election. This allows such adjustments to be assessed and a look at who would most likely benefit. Such information can be used to make a system fairer or indeed, influence the result.

So next time you are completing a ballot paper, remember the complex calculation that your decision is about to join.

—

[1] The word ‘reasonable’ here is loaded with official properties that the voting system must have for the Gibbard-Satterthwaite theorem to apply. However, these are standard in most situations.

Journal Juice: summary of the research paper, 'On the Inclination and Habitability of the HD 10180 System', Kane & Gelino, 2014. (Shared also on Google+)

This animation shows an artist's impression of the remarkable planetary system around the Sun-like star HD 10180. Observations with the HARPS spectrograph, attached to ESO's 3.6-metre telescope at La Silla, Chile, have revealed the definite presence of five planets and evidence for two more in orbit around this star.

HD 10180 is a sun-like star with a truck load of planets. The exact number in this ‘truck load’ however, is slightly more uncertain. The problem is that we haven’t seen these brave new worlds cross directly in front of their star, but have found them by looking at the wobble they produce in the star’s motion. As a planet orbits its stellar parent, its gravity pulls on the star to make it move in a small, regular oscillation as the planet alternates from one side of the star to the other. By fitting this periodic wobble, scientists can estimate the planet’s mass and location.
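Here is what "fitting the wobble" means in its very simplest form. This is a minimal sketch, not the method used in the papers: it assumes one planet on a circular orbit, a period that is already known, and observations evenly spaced over a whole number of orbits. Under those assumptions, sine and cosine terms are orthogonal, so projecting the data onto them gives the wobble amplitude (which sets the minimum mass) and its phase, and a constant velocity offset drops out. Real analyses fit full elliptical orbits for several planets at once, which is where competing solutions come from.

```python
import math


def fit_sinusoid(times, velocities, period):
    """Amplitude and phase of v(t) = K * sin(2*pi*t/P + phi) at a known period.

    Assumes evenly spaced samples covering whole orbits, so sin and cos terms
    are orthogonal and a constant offset averages away.
    """
    n = len(times)
    s = sum(v * math.sin(2 * math.pi * t / period) for t, v in zip(times, velocities))
    c = sum(v * math.cos(2 * math.pi * t / period) for t, v in zip(times, velocities))
    a, b = 2 * s / n, 2 * c / n          # v ~ a*sin(x) + b*cos(x) + offset
    return math.hypot(a, b), math.atan2(b, a)


# Synthetic wobble: 3 m/s amplitude, 10-day period, 5 m/s constant offset
P = 10.0
times = [0.5 * k for k in range(200)]    # evenly spaced over 10 full orbits
rv = [3.0 * math.sin(2 * math.pi * t / P + 0.4) + 5.0 for t in times]
K, phase = fit_sinusoid(times, rv, P)
print(f"recovered amplitude {K:.3f} m/s, phase {phase:.3f} rad")
```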

The problem is that when there is a truck load of planets, there are multiple possible fits to the star’s motion, and each of these yield different answers for the properties of the planetary system.

In 2011, a paper by Lovis et al. determined that there were 7 planets orbiting HD 10180, labelled HD 10180b through to HD 10180h. However, they were uncertain about the existence of the innermost ‘b’ planet and their model made a number of inflexible assumptions about the planet motions.

In this paper, authors Kane & Gelino revisit the system. One of the constraints they remove from the Lovis model is the insistence that a number of the planets sit on circular orbits. Rather, the authors allow the planets to potentially all follow squished elliptical paths around their star. In our own Solar System, the Earth has an almost circular orbit around the sun, but Pluto does not, moving in a squashed circle of an orbit.

The result of this new model is a six-planet system, in which the dubious planet ‘b’ is removed. Two other planets, ‘g’ and ‘h’, also have their orbits changed, with ‘g’ now moving on a more elliptical path. This is particularly interesting since planet ‘g’ was thought to be in the habitable zone: the region around the star where the radiation levels would be right to support liquid water. How does g’s new orbit change things?

Before charging off in that direction, the authors ask a second important question: what is the inclination of the planetary system? Are we looking at it edge-on (inclination angle i = 90 degrees), face-on (i = 0 degrees) or somewhere in between (0 < i < 90 degrees)?

This question is important since it affects the estimated mass of the planets. The more face-on the planetary system is with respect to our view on Earth, the larger the mass of the planets must be to produce the observed star wobble. Unfortunately, inclination is devilishly hard to determine without being able to watch the planets pass in front of their star.

While it was not possible to measure the inclination directly, the authors ran simulations of the planetary system’s evolution to test out different options. Since the planets’ masses change with the assumed inclination, the gravitational interactions between the worlds also change. Some of these combinations are not stable, kicking a planet out of its orbit. Unless we got very lucky with regard to when we observed the planets, these unstable configurations are probably not correct. This allows scientists to narrow down the inclination possibilities. The simulations suggested that an inclination of less than 20 degrees was not looking at all good for planet ‘d’.
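The authors used full simulations of the system's evolution. As a much cruder stand-in, and not their method, a common rule of thumb measures the gap between two neighbouring orbits in units of the planets' "mutual Hill radius", the zone where their combined gravity matters; for two planets on circular orbits, a gap below about 2√3 ≈ 3.46 of these units is a warning sign. The sketch below uses a hypothetical pair of planets and hypothetical function names to show why tilting the system towards face-on (and so raising the masses by 1/sin i) squeezes planets towards instability.

```python
import math

M_SUN_IN_EARTHS = 332946.0   # one solar mass expressed in Earth masses


def mutual_hill_spacing(a1, a2, m1, m2, m_star=M_SUN_IN_EARTHS):
    """Gap between two orbits (a1 < a2) in units of their mutual Hill radius.

    Distances share one unit (AU here); masses share another (Earth masses here).
    """
    r_hill = ((m1 + m2) / (3 * m_star)) ** (1 / 3) * (a1 + a2) / 2
    return (a2 - a1) / r_hill


def spacing_at_inclination(a1, a2, m1_sini, m2_sini, inclination_deg):
    """Scale the wobble-derived minimum masses by 1/sin(i), then measure the gap."""
    scale = 1 / math.sin(math.radians(inclination_deg))
    return mutual_hill_spacing(a1, a2, m1_sini * scale, m2_sini * scale)


CRITICAL = 2 * math.sqrt(3)  # rough two-planet threshold for circular orbits

# Hypothetical planets: 20 Earth masses (m sin i) each, at 0.5 AU and 0.6 AU
for inc in (90, 45, 20, 10):
    gap = spacing_at_inclination(0.5, 0.6, 20, 20, inc)
    verdict = "probably fine" if gap > CRITICAL else "at risk"
    print(f"i = {inc:2d} deg: gap = {gap:.2f} mutual Hill radii ({verdict})")
```

Real multi-planet systems usually need wider gaps than this two-planet threshold, which is one reason the authors relied on simulations rather than a formula.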

With the new model and a set of different inclinations, the authors then returned to the most exciting planet (from the point of view of a new Starbucks chain): planet ‘g’. Even with its new squished-circle orbit, planet ‘g’ spends 89% of its time in the main habitable zone. The remaining 11% is spent within the more optimistic inner edge of the habitable zone, where temperatures might be able to support liquid water for a limited epoch of the planet’s history. This is a pretty promising orbit, since there is evidence that a planet’s atmosphere might be able to redistribute heat and save it during the time it spends in a rather too toasty location.
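A figure like "89% of its time" is a statement about how long an elliptical orbit spends at each distance: a planet moves fastest near closest approach, so it lingers there least. The sketch below computes the fraction of an orbit spent inside a given distance using Kepler's equation. The orbit and the boundary in the example are hypothetical round numbers, not the values from Kane & Gelino, and the function name is illustrative.

```python
import math


def fraction_of_time_inside(a, e, r_limit):
    """Fraction of an orbital period a planet spends closer to its star than r_limit.

    Uses the eccentric anomaly E, for which r = a(1 - e cos E), and Kepler's
    equation M = E - e sin E, where the mean anomaly M grows steadily with time.
    """
    if r_limit <= a * (1 - e):
        return 0.0  # the planet never gets that close
    if r_limit >= a * (1 + e):
        return 1.0  # the planet is always that close
    e0 = math.acos((1 - r_limit / a) / e)  # inside r_limit while -e0 < E < e0
    return (e0 - e * math.sin(e0)) / math.pi


# Hypothetical orbit: semi-major axis 1.4 AU, eccentricity 0.25.
# Hypothetical boundary: the main habitable zone begins beyond 1.2 AU.
too_close = fraction_of_time_inside(1.4, 0.25, 1.2)
print(f"inside the boundary: {too_close:.0%} of the orbit")
print(f"beyond the boundary: {1 - too_close:.0%} of the orbit")
```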

However, planet ‘g’ has other problems.

If the planetary system is edge-on, planet ‘g’ has a mass of 23.3 times Earth’s, with a hefty radius of 0.5 times Jupiter’s. Tilting towards face-on, to i = 10 degrees (bye bye planet ‘d’), pushes the mass still higher to 134 Earth masses, with a radius about the same as Jupiter’s.
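The wobble method measures the combination m sin i rather than the mass m itself, so the edge-on case (i = 90 degrees, sin i = 1) gives the smallest mass the planet can have. Assuming the quoted 23.3 Earth masses is that minimum, dividing by sin i reproduces the jump to roughly 134 Earth masses at i = 10 degrees. A minimal sketch, with a hypothetical function name:

```python
import math


def true_mass(min_mass, inclination_deg):
    """Convert a wobble-derived minimum mass (m sin i) into the planet's actual mass."""
    return min_mass / math.sin(math.radians(inclination_deg))


# Planet 'g': 23.3 Earth masses when seen edge-on
for inc in (90, 60, 30, 20, 10):
    print(f"i = {inc:2d} deg -> {true_mass(23.3, inc):6.1f} Earth masses")
```

The mass grows slowly at first and then rapidly as the system approaches face-on, since sin i heads towards zero.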

All options, then, suggest that promising planet ‘g’ is not a rocky world like the Earth, but a gas giant. The best hope for a liveable location would therefore be an Ewok-infested moon. Yet even here the authors have doubts. Conditions on a moon are affected both by the central star and by heat and gravitational tides from the planet itself. Normally, these are small enough to ignore compared with the star’s influence, but with the planet skirting so close to the star for 11% of its orbit, the extra heating may be enough to give a moon some serious dehydration problems.

The upshot is that the inclination of the planetary system is vitally important for determining the masses of its member planets, and that HD 10180g is worth watching for moons, but probably not ready for a Butlin’s holiday resort.

Spotlight on Research is the research blog I author for Hokkaido University, highlighting different topics being studied at the University each month. These posts are published on the Hokkaido University website.

Professors Bi-Chang Chen and Peilin Chen describe their research. Left: (anti-clockwise from bottom) myself, Professor Peilin Chen, Professor Bi-Chang Chen and Professor Nemoto. Right: Professor Peilin Chen (left) and Bi-Chang Chen.

“Everyone wants to see things smaller, faster, for longer and on a bigger scale!” Professor Bi-Chang Chen exclaims.

It sounds like an impossible demand, but Bi-Chang may have just the tool for the job.

Professor Bi-Chang Chen and his colleague, Professor Peilin Chen, are from Taiwan’s Academia Sinica. Their visit to Hokudai this month was part of a collaboration with Professors Tomomi Nemoto and Tamiki Komatsuzaki in the Research Institute for Electronic Science. The excitement centres on Bi-Chang’s microscope design: a revolutionary technique that can take images so fast and so gently that it can be used to study living cells.

The building blocks of all living plants and animals are their biological cells. However, many aspects of how these essential life-units work remain a mystery, since we have never been able to follow individual cells as they evolve.

The problem is that cells are changing all the time. Like photographing a fast-moving runner, an image of a living cell must be taken very quickly or it will blur. However, while a photographer would use a camera flash to capture a runner, increasing the intensity of light on the cells knocks them dead.

Bi-Chang’s microscope avoids these problems. The first fix is to reduce unnecessary light on the parts of the cell not being imaged. When you look down a traditional microscope, the lens is adjusted to focus at a given distance, allowing you to see different depths in the cell clearly. A beam of light then travels through the lens along your line of sight and illuminates the sample. The problem with this system is that if you are focusing on the middle of a cell, the front and back of the cell also get illuminated. This both increases the blur in the image and drenches those extra parts of the cell in damaging light. With Bi-Chang’s microscope, the light is sent in at right angles to your line of sight, illuminating only the layer of the cell at the depth where the microscope is focused.

This is clever, but it is not enough for the resolution Bi-Chang had in mind. The shape of a normal light beam is known as a ‘Gaussian beam’ and is actually too fat to see inside a cell. It is like trying to discover the shape of peanuts by poking in the bag with a hockey stick. Bi-Chang therefore changed the shape of the light so it became a ‘Bessel beam’. A cross-section of a Bessel beam looks like a bullseye dart board: it has a narrow bright centre surrounded by dimmer rings. The central region is like a thin chopstick and perfect for probing the inside of a cell, but the outer rings still swamp the cell with extra light.
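The bullseye pattern follows from the brightness profile of an ideal Bessel beam, which is proportional to the square of the Bessel function J0 evaluated at the (scaled) distance from the beam's centre. The sketch below evaluates J0 from its integral form using only Python's standard library; the radius r is in arbitrary scaled units, and this idealised beam is an assumption for illustration, not a model of Bi-Chang's actual optics. The function names are hypothetical.

```python
import math


def bessel_j0(x, steps=200):
    """Bessel function J0(x) from its integral form (1/pi) * integral_0^pi cos(x sin t) dt.

    A midpoint-rule sum; the integrand repeats every pi, so this converges very quickly.
    """
    h = math.pi / steps
    total = sum(math.cos(x * math.sin((k + 0.5) * h)) for k in range(steps))
    return total * h / math.pi


def bessel_intensity(r):
    """Relative brightness of an ideal Bessel beam at scaled radius r (1.0 at the centre)."""
    return bessel_j0(r) ** 2


# A line across the beam: bright centre, dark gap near r = 2.40,
# first ring near r = 3.83, dark again near r = 5.52, second ring near r = 7.02.
for r in (0.0, 1.0, 2.40, 3.83, 5.52, 7.02):
    print(f"r = {r:4.2f}: intensity {bessel_intensity(r):.3f}")
```

The rings are dimmer than the centre, but in an ideal Bessel beam they carry on outwards, which is why they spread unwanted light across the cell.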

Bi-Chang fixed this by using not one Bessel beam, but around a hundred. Where the beams overlap, the resultant light is found by adding the beams together. Since light is a wave with peaks and troughs, Bi-Chang was able to arrange the beams so the outer rings cancelled one another, a process familiar to physics students as ‘destructive interference’. This left only the central part of the beams which could combine to illuminate a thin layer of the cell at the focal depth of the microscope.
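Destructive interference can be shown by adding waves as complex numbers, each carrying its own phase (where it sits between peak and trough), and then squaring the size of the total to get the brightness. This toy sketch, with a hypothetical function name and equal-strength waves, only illustrates the principle; it does not reproduce how the beams in a lattice light sheet are actually arranged.

```python
import cmath
import math


def combined_intensity(phases_rad, amplitude=1.0):
    """Brightness where equal-strength waves overlap: |sum of complex amplitudes|^2."""
    total = sum(amplitude * cmath.exp(1j * phi) for phi in phases_rad)
    return abs(total) ** 2


# In step: amplitudes add, so two waves give four times one wave's brightness
print(combined_intensity([0.0, 0.0]))
# Peak meets trough: the waves cancel (zero, up to rounding error)
print(combined_intensity([0.0, math.pi]))
# Three waves with evenly spread phases also cancel
print(combined_intensity([2 * math.pi * k / 3 for k in range(3)]))
```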

Not only does this produce a sharp image with minimal unnecessary light damage, but the combination of many beams also allows a wide region of the sample to be imaged at once. A traditional microscope must move point by point over the sample, taking images that are all at slightly different times. Bi-Chang’s technique can capture a whole plane, covering a much wider area, in a single snapshot.

To his surprise, Bi-Chang also found that this lattice of light beams (known as a lattice light sheet microscope) made his cells healthier. In splitting the light into multiple beams, the intensity of the light in each region was reduced, causing less damage to the cells.

The net result is a microscope that can look inside cells and leave them unharmed, allowing it to take repeated images of a cell as it changes and divides. By rapidly imaging each layer, a three-dimensional view of the cell can be put together. Such a dynamic view of a living cell has never been achieved before, and it opens the door to a far more detailed study of the fundamental workings of cells. Applications include understanding what triggers cell division in cancers, how cells react to external stimuli and how messages are passed in the brain.

“We don’t know how powerful this technique is yet,” explains Peilin Chen. “We don’t know how far we can go.”

This is a question Tomomi Nemoto’s group are eager to help with. In collaboration with Hokudai, Bi-Chang and Peilin want to see if they can scale up their current view of a few cells to a larger system.

“We’d like to extend the field of view and if possible, look at a mouse brain and the neuron activity,” Bi-Chang explains. “That is our next goal!”

It is an exciting possibility and one that may be supported by a new grant Hokudai has received from the Japanese Government. Last summer, Hokudai became part of the ‘Top Global University Project’, receiving annual funding for ten years to increase internationalisation at the university. Part of this budget will be used in research collaborations to allow ideas such as Bi-Chang’s microscope to be combined with projects that can put this new technology to use. Students at Hokudai will also get the opportunity to take courses offered by guest lecturers from around the world. These are connections that will make 2015 the best year yet for research.

Let’s play a game. I am going to flip a coin. If you guess correctly which side it will land on, I will give you $1. If you get it wrong, you will give me $1. We will play this game 100 times.

Since we are using my coin, it may be weighted to preferentially land on one side. I am not going to tell you which way the bias works, but after 20 to 30 flips, you will notice that the coin lands with ‘heads’ facing up three times out of four.

What are you going to do?

This was the question posed by Professor Andrew Lo from the Massachusetts Institute of Technology, during his talk last week for the Origins Institute Public Lecture Series. Lo’s background is in economics. It is a research field with a problem.

In common with subjects such as anthropology, archaeology, biology, history and psychology, economics is about understanding human behaviour. While the findings in these areas may not always be relevant to one another, they should not be contradictory. For example, studying mating behaviours in anthropology should be consistent with the biological mechanics of human reproduction. The problem is that economics is full of contradictions.

In the example of the weighted coin, the financially sound choice for a hypothetical ‘Homo economicus’ would be to always pick ‘heads’. This would correctly predict the coin toss 75% of the time and net a tidy profit. However, when this choice is given to real Homo sapiens, people randomise their guesses. In fact, humans match the probability of the coin weighting, selecting heads 75% of the time and tails 25% of the time. Moreover, if the coin is surreptitiously switched during the game for one weighted 60% heads, 40% tails, the contestants change their guesses to select heads 60% of the time as well.
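The cost of probability matching is simple expected-value arithmetic. If you guess heads with probability q and the coin lands heads with probability p, you are right with probability qp + (1 - q)(1 - p), and each flip wins or loses $1. A minimal sketch, with a hypothetical function name:

```python
def expected_winnings(p_guess_heads, p_heads, flips=100, stake=1.0):
    """Expected profit from guessing 'heads' with probability p_guess_heads
    on a coin that lands heads with probability p_heads."""
    p_correct = p_guess_heads * p_heads + (1 - p_guess_heads) * (1 - p_heads)
    # Win the stake when right, lose it when wrong
    return flips * stake * (2 * p_correct - 1)


print(expected_winnings(1.0, 0.75))   # always 'heads': 50.0
print(expected_winnings(0.75, 0.75))  # probability matching: 25.0
```

Over 100 flips, always calling heads earns an expected $50, while matching the coin's 75/25 split halves that to $25.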

Economics tells us this is not the choice we should be making. So why do we do it?

This behaviour holds even in much more serious situations. During World War II, the U.S. Army Air Forces organised bombing missions over Germany. These were incredibly dangerous flights with two main risks: the plane could take a direct hit and be shot down, or it could be pelted with shrapnel from anti-aircraft artillery. In the former case, the best chance of survival would be a parachute. In the latter, it would be safest to wear a metal-plated flak jacket. Because of the weight of the flak jacket, it was not possible for pilots to take both pieces of equipment. The army therefore gave them a choice.

The chances of being hit by shrapnel were roughly three times higher than being shot down. Despite this, pilots selected the flak jackets only 75% of the time. When the army realised this was delivering a high death toll to their pilots, they tried to mandate the flak jacket selection. However, the pilots then refused to volunteer for such missions, claiming that if they were taking their lives in their own hands, they ought to be able to pick the kit they wanted.

What is perhaps even stranger is that humans are not the only species that does this probability matching. Similar behaviour is seen in ants, fish, pigeons, mice and any other species that can choose between option A and option B. This can be tested on fish surprisingly simply. If you feed a tank of fish from the left-hand corner 75% of the time and from the right-hand corner 25% of the time, then 25% of the fish will swim hopefully to the right side of the tank when it is time to be fed.

Lo’s feeling was that this common probability matching trait must have stemmed from a survival advantage. He therefore turned from economics to evolution.

Lo admitted that crossing from one field of research to another can be daunting. He humorously recalled an attempt by his university to bring together researchers from different areas at an organised dinner.

“We were speaking different languages! I never went back!” he told us.

Despite this disastrous first attempt, Lo has found himself drawn into projects that span fields.

“Interdisciplinary work comes about naturally when you’re trying to solve a problem,” he explained. “Problems don’t care about traditional field boundaries.”

To explore the possible survival advantages of a probability matching instinct, Lo considered a simple choice for a creature: where to build a nest?

In Lo’s system there are two choices of nest location: a valley floor by a river, or high up on a plateau. While it is sunny, the valley floor is the best choice: it is shaded and has a water source, allowing the creature to breed successfully. Meanwhile, the plateau is too hot and any offspring will die. However, when it rains, the situation is reversed: the valley floods and kills all the creatures, while the plateau provides a safe nest.
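This scenario is easy to turn into a toy simulation. The sketch below is not Lo's own model: the weather sequence, the three offspring per successful nest and the function name are all hypothetical, and it tracks expected numbers rather than individual creatures. It shows only the consequence spelled out above: a lineage that always picks the same site is wiped out the first time the weather goes against it.

```python
def simulate_lineage(weather, p_plateau, offspring_per_nest=3.0, start=1000.0):
    """Expected population of a lineage after a run of seasons.

    Each creature nests on the plateau with probability p_plateau, otherwise
    in the valley. Sun: only valley nests breed. Rain: only plateau nests breed.
    """
    population = start
    for season in weather:
        if season == "sun":
            survivors = population * (1 - p_plateau)
        else:  # "rain": the valley floods
            survivors = population * p_plateau
        population = survivors * offspring_per_nest
    return population


weather = ["sun", "sun", "sun", "rain", "sun"]
for p in (0.0, 0.25, 0.5, 1.0):
    print(f"plateau probability {p:.2f}: population {simulate_lineage(weather, p):.1f}")
```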